Climate information for the future is usually presented in the form of scenarios: plausible and consistent descriptions of future climate without probability information. This suffices for many purposes, but for the near term, say up to 2050, scenarios of emissions of greenhouse gases do not diverge much and we could work towards climate forecasts: calibrated probability distributions of the climate in the future.

Guest post by Geert Jan van Oldenborgh

(with contributions from Francisco Doblas-Reyes, Sybren Drijfhout, Ed Hawkins)

This effort in producing probabilistic projections would be a logical extension of the weather, seasonal and decadal forecasts in existence or being developed (Palmer et al, 2008). In these fields a fundamental forecast property is ‘reliability’: when the forecast probability of rain tomorrow is 60%, it should rain on 60% of all days with such a forecast. This is routinely checked: before a new model version is introduced a period in the past is re-forecast to see if this indeed holds. However, in seasonal forecasting a reliable forecast is often constructed on the basis of a multi-model ensemble, as forecast systems individually tend to be overconfident (they underestimate the actual uncertainties) (e.g. Weisheimer et al. 2011).
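As a rough illustration of the reliability check described above, the sketch below groups probability forecasts into bins and compares each bin's mean forecast probability with how often the event actually occurred. The function name `reliability_table`, the choice of ten bins and the toy data are assumptions made for this example and are not taken from any operational verification system.

```python
from collections import defaultdict


def reliability_table(forecast_probs, outcomes, n_bins=10):
    """Compare forecast probabilities with observed frequencies.

    forecast_probs: forecast probabilities in [0, 1], one per forecast.
    outcomes: 1 if the event occurred, 0 otherwise, one per forecast.
    Returns a list of (mean forecast probability, observed frequency, count)
    for every non-empty probability bin.
    """
    bins = defaultdict(list)
    for p, o in zip(forecast_probs, outcomes):
        # A forecast of exactly 1.0 is placed in the last bin.
        idx = min(int(p * n_bins), n_bins - 1)
        bins[idx].append((p, o))

    table = []
    for idx in sorted(bins):
        pairs = bins[idx]
        mean_p = sum(p for p, _ in pairs) / len(pairs)
        obs_freq = sum(o for _, o in pairs) / len(pairs)
        table.append((mean_p, obs_freq, len(pairs)))
    return table


# Toy data (hypothetical): ten days with a 60% rain forecast, rain on six.
forecasts = [0.6] * 10
rained = [1] * 6 + [0] * 4
for mean_p, obs_freq, count in reliability_table(forecasts, rained):
    # For a reliable forecast the two numbers should be close.
    print(f"forecast {mean_p:.2f}  observed {obs_freq:.2f}  n={count}")
```

In this toy case the forecast probability and the observed frequency are both about 0.6, which is what reliability requires. An overconfident system would show observed frequencies closer to the climatological rate than the forecast probabilities suggest.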

As the climate change signal is now emerging from the noise in many regions of the world, the verification of regional past trends in climate models has become possible. The question is whether the recent CMIP5 multi-model ensemble, interpreted as a probability forecast, is reliable.

As there is only one trend estimate per grid point, necessarily the verification has to be done spatially, over all regions of the world. The CMIP3 ensemble was analysed in this way by Räisänen (2007) and Yokohata et al (2012). In the last few months three papers have appeared that approach this question for the CMIP5 ensemble with different methodologies: Bhend and Whetton (2013), van Oldenborgh et al (2013) and Knutson et al (to appear).

All these studies reach similar conclusions. For temperature: the ensemble is reliable if one considers the full signal, but this is due to the differing global mean temperature responses (Total Global Response, TGR). When the global mean temperature trend is factored out the ensemble becomes overconfident: the spatial variability is too low. For annual mean precipitation the ensemble is also found to be overconfident. Precipitation trends in 3-month seasons have so much natural variability compared to the trends that the overconfidence is no longer visible. These conclusions agree with earlier work using the Detection and Attribution framework, which showed that continental-averaged temperature trends can be attributed to anthropogenic factors (e.g. Stott et al, 2003) but that zonally-averaged precipitation trends are not reproduced correctly by climate models (Zhang et al, 2007).

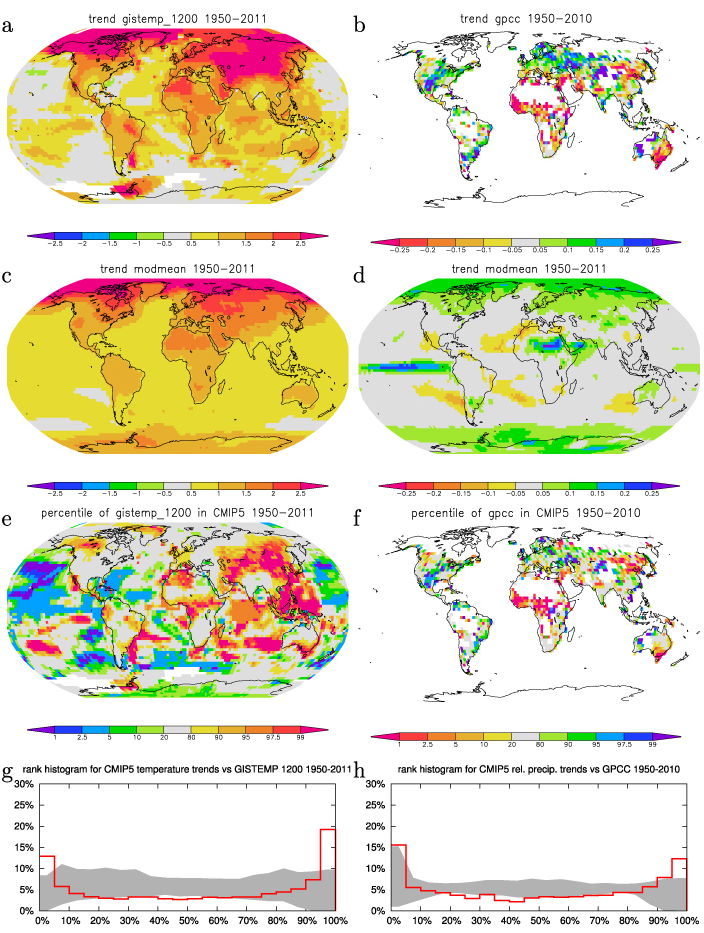

The spatial patterns for annual mean temperature and precipitation are shown in figure 1 below. The trends are defined as regressions on the modelled global mean temperature, i.e., we plot B(x,y) in

$$T(x,y,t) = B(x,y)\,T_{\mathrm{global,mod}}(t) + \eta(x,y,t)$$

This definition excludes the TGR and minimises the noise η(x,y,t) better than a trend that is linear in time.
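The regression that defines B(x,y) can be sketched for a single grid point as an ordinary least-squares fit of the local temperature series on the modelled global mean temperature. This is a minimal sketch, not the code used in the paper: the function name `regress_on_global_mean` and the toy series are hypothetical, and centring both series (which amounts to allowing an intercept) is an assumption of this example.

```python
def regress_on_global_mean(local_series, global_series):
    """Least-squares fit of local = B * global + eta at one grid point.

    Both series are centred first, so B is the regression slope and the
    returned residuals are the noise term eta for each time step.
    """
    n = len(local_series)
    if n != len(global_series) or n < 2:
        raise ValueError("series must have equal length of at least 2")

    local_mean = sum(local_series) / n
    global_mean = sum(global_series) / n

    cov = sum((l - local_mean) * (g - global_mean)
              for l, g in zip(local_series, global_series))
    var = sum((g - global_mean) ** 2 for g in global_series)
    if var == 0:
        raise ValueError("global series has no variance")

    b = cov / var
    residuals = [(l - local_mean) - b * (g - global_mean)
                 for l, g in zip(local_series, global_series)]
    return b, residuals


# Toy data (hypothetical): a grid point that warms twice as fast as the globe.
t_global = [0.0, 0.1, 0.2, 0.3, 0.4]
t_local = [2.0 * g for g in t_global]
b, eta = regress_on_global_mean(t_local, t_global)
print(f"B = {b:.2f} K per K of global mean warming")
```

A value of B above 1 means the grid point warms faster than the global mean; repeating the fit at every grid point would give a map of B.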

The conclusion that the ensemble is somewhat overconfident is based on the bottom two panels. These show that over 10%–20% of the map area the observed trends are in the top and bottom 5% of the ensemble. For a reliable ensemble this should be 5%. The deviations are larger than we obtain from the differences between the models (the grey area). On the maps above, the areas where the observed trends fall in the tails of the ensemble are coloured. In part of these areas the discrepancies are due to random weather fluctuations, but a large fraction has to be ascribed to forecast system biases. (The results do not depend strongly on the observational dataset used: with HadCRUT4.1.1.0, NCDC LOST and CRU TS 3.1 we obtain very similar figures; see the Supplementary Material of van Oldenborgh et al.)
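The tail test behind this conclusion can be sketched as follows: at each grid point, find where the observed trend ranks within the ensemble of modelled trends, then count how often it lands in the lowest or highest 5%. The function name `tail_fractions` and the toy numbers are hypothetical. The sketch also counts every grid point equally, whereas a real analysis on a latitude-longitude grid would weight by area.

```python
def tail_fractions(observed, ensemble, tail=0.05):
    """Fraction of grid points where the observation is in an ensemble tail.

    observed: one observed trend per grid point.
    ensemble: for each grid point, the list of modelled trends.
    Returns (fraction in the lower tail, fraction in the upper tail).
    """
    if len(observed) != len(ensemble) or not observed:
        raise ValueError("need one ensemble per observed grid point")

    low = 0
    high = 0
    for obs, members in zip(observed, ensemble):
        # Fraction of ensemble members with a trend below the observed one.
        rank = sum(m < obs for m in members) / len(members)
        if rank < tail:
            low += 1
        elif rank > 1.0 - tail:
            high += 1

    n = len(observed)
    return low / n, high / n


# Toy data (hypothetical): four grid points sharing the same 20-member ensemble.
members = [float(i) for i in range(20)]
obs = [-1.0, 9.5, 25.0, 10.5]
low_frac, high_frac = tail_fractions(obs, [members] * len(obs))
print(f"lower tail: {low_frac:.2f}, upper tail: {high_frac:.2f}")
```

For a reliable ensemble each fraction would be close to the chosen `tail` value; markedly larger fractions indicate overconfidence.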

These forecast system biases can arise in three ways.

First, the models may underestimate low-frequency natural variability. Knutson et al show that natural variability in the warm pool around the Maritime Continent is indeed underestimated up to time scales of >10 years, contributing to the discrepancy there in Fig. 1e. In most other regions the models have either the correct variability or too much.

Another cause may be the incorrect specification of local forcings such as aerosols or land use. As an example, visibility observations suggest that aerosol loadings in Europe were higher in winter in the 1950s than assumed in CMIP5. This influences temperature via mist and fog (Vautard et al, 2009) and other mechanisms.

Finally, the model response to the changes in greenhouse gases, aerosols and other forcings may be incorrect. The trend differences in Asia and Canada are mainly in winter and could be due to problems in simulating the stable boundary layers there.

To conclude, climate models can be, and have been, verified against observations in a property that is most important for many users: the regional trends. This verification shows that many large-scale features of climate change are being simulated correctly, but smaller-scale observed trends are in the tails of the ensemble more often than predicted by chance fluctuations. The CMIP5 multi-model ensemble therefore cannot be used as a probability forecast for future climate. We have to present the useful climate information in climate model ensembles in other ways until these problems have been resolved.

UPDATE: A video abstract to go along with this paper is also available.

van Oldenborgh, G., Doblas Reyes, F., Drijfhout, S., & Hawkins, E. (2013). Reliability of regional climate model trends. Environmental Research Letters, 8 (1). DOI: 10.1088/1748-9326/8/1/014055

“This verification shows that many large-scale features of climate change are being simulated correctly”

That conclusion over-reaches from the data in my opinion. Correlation is not causation. The oceanic oscillations are not taken fully into account by climate models so far as I can tell. Please see this new post on my website:

http://tallbloke.wordpress.com/2013/04/15/roger-andrews-a-new-climate-index-the-northern-multidecadal-oscillation/

Mitchell et al 2013

Revisiting the Controversial Issue of Tropical Tropospheric Temperature Trends

“Over the 1979-2008 period tropical temperature trends are not consistent with observations throughout the depth of the troposphere, and this primarily stems from a poor simulation of the surface temperature trends.”