Guest post by Piers Forster, with comments from Jonathan Gregory & Ed Hawkins

Lewis & Crok have circulated a report, published by the Global Warming Policy Foundation (GWPF), criticising the assessment of equilibrium climate sensitivity (ECS) and transient climate response (TCR) in both the AR4 and AR5 IPCC assessment reports.

Climate sensitivity remains an uncertain quantity. Nevertheless, employing the best estimates suggested by Lewis & Crok, further and significant warming is still expected out to 2100, to around 3°C above pre-industrial climate, if we continue along a business-as-usual emissions scenario (RCP 8.5), with continued warming thereafter. However, there is evidence that the methods used by Lewis & Crok result in an underestimate of projected warming.

Lewis & Crok perform their own evaluation of climate sensitivity, placing more weight on studies using “observational data” than on estimates of climate sensitivity based on climate model analysis. These studies employ techniques we have developed over a number of years (Gregory et al., 2002; Forster and Gregory, 2006; Gregory and Forster, 2008). The techniques have proven useful but, as discussed in those papers, they are limited by their own set of assumptions and data issues, which makes them not necessarily more trustworthy than other techniques. Lewis & Crok suggest a lower estimate for climate sensitivity than the IPCC, but the IPCC did not make such a value judgment about the different methods of evaluating climate sensitivity.

Here we illustrate the effect of the data quality issues and assumptions made in these “observational” approaches and demonstrate that these methods do not necessarily produce more robust estimates of climate sensitivity.

Assumptions:

Lewis & Crok make much of the fact that our techniques employ “observational data” rather than a climate model. In fact, whilst they do not use complex dynamical climate models, they always use an underlying conceptual climate model. These underlying conceptual models make very crude assumptions and capture almost none of the physical complexity of either the real world or more complex models.

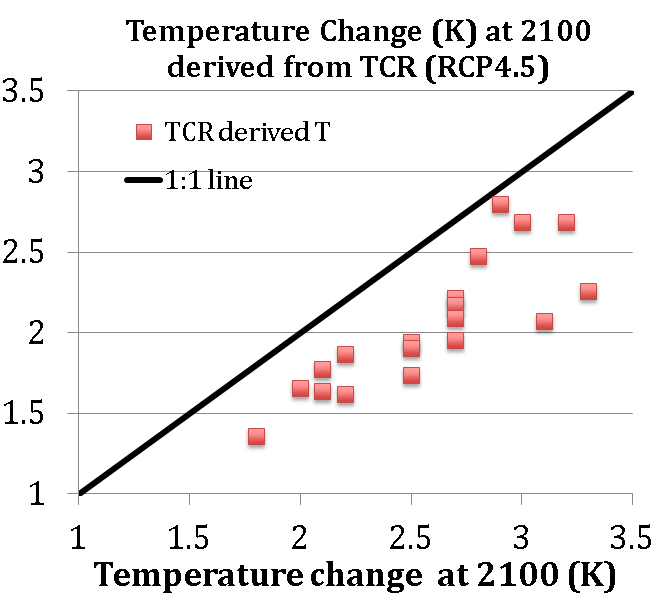

Varying the physics of these simple models (such as ocean depth), varying how the data are analysed (e.g. regression methodologies), and varying how prior knowledge is factored into the overall assessment (Bayesian priors) all influence the resulting climate sensitivity (Forster and Gregory, 2006; Gregory and Forster, 2008). Particularly relevant is our analysis in Forster et al. (2013), which confirms that the Gregory and Forster (2008) method employed in the Lewis & Crok report to make projections (by scaling TCR) leads to systematic underestimates of future temperature change (see Figure 1), especially for low emissions scenarios, as was already noted by Gregory and Forster (2008).
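To see why scaling TCR by the concurrent forcing can miss warming, here is a toy comparison against a two-layer (two-box) energy-balance model. All parameter values are hypothetical and chosen only for illustration; this is not the analysis behind Figure 1 and does not reproduce any particular paper's method. TCR is taken from the same toy model's response to a 1%/yr CO2 increase, and the scenario is a hypothetical pathway whose forcing stops rising after 100 years.

```python
import numpy as np

# Hypothetical two-box parameters (illustrative only, not fitted to any model or observations)
C_UPPER = 8.0    # upper-layer heat capacity, W yr m^-2 K^-1
C_DEEP = 100.0   # deep-ocean heat capacity, W yr m^-2 K^-1
LAMBDA = 1.3     # climate feedback parameter, W m^-2 K^-1
GAMMA = 0.7      # upper/deep ocean heat-exchange coefficient, W m^-2 K^-1
F_2X = 3.7       # forcing for doubled CO2, W m^-2 (assumed)
DT = 0.1         # time step, years


def time_axis(n_years):
    """Times DT, 2*DT, ..., n_years (in years)."""
    return np.arange(1, int(round(n_years / DT)) + 1) * DT


def two_box(forcing):
    """Upper-layer temperature response to a forcing series, integrated with forward Euler."""
    t_up, t_deep = 0.0, 0.0
    temps = np.empty(len(forcing))
    for i, f in enumerate(forcing):
        exchange = GAMMA * (t_up - t_deep)  # heat flux into the deep ocean
        t_up += DT * (f - LAMBDA * t_up - exchange) / C_UPPER
        t_deep += DT * exchange / C_DEEP
        temps[i] = t_up
    return temps


# TCR of the toy model: warming at year 70 of a 1%/yr CO2 increase (forcing ~ F_2X * log2 of CO2 ratio).
t_ramp = time_axis(70)
tcr = two_box(F_2X * t_ramp * np.log(1.01) / np.log(2.0))[-1]

# Hypothetical low-emissions pathway: forcing rises to 3 W m^-2 over 100 years, then stays fixed.
t_scen = time_axis(200)
f_scen = np.minimum(3.0 * t_scen / 100.0, 3.0)
warming_two_box = two_box(f_scen)

# TCR scaling assumes warming tracks concurrent forcing: dT = TCR * F / F_2X.
warming_tcr_scaled = tcr * f_scen / F_2X

i_100 = int(round(100 / DT)) - 1  # index of year 100
for label, i in (("year 100", i_100), ("year 200", -1)):
    print(f"{label}: two-box {warming_two_box[i]:.2f} K, TCR-scaled {warming_tcr_scaled[i]:.2f} K")
print(f"toy-model TCR = {tcr:.2f} K")
```

Because the TCR-scaled estimate is tied to the current forcing, it stops rising once forcing stabilises, whereas the two-box model keeps warming as the deep ocean slowly equilibrates; in this toy setting that is where the gap opens up.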

Observational data:

The “observational data” techniques often rely on short datasets with coverage and data quality issues (e.g. Forster and Gregory, 2006). These lead to wide uncertainty in climate sensitivity, making it hard to place a high degree of confidence in one best estimate.
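The energy-budget relations behind estimates such as Otto et al. (2013) are TCR = F2x ΔT/ΔF and ECS = F2x ΔT/(ΔF − ΔQ), where ΔQ is the heat uptake. The sketch below propagates uncertainties through them by Monte Carlo sampling. The central values, uncertainties, F2x and the assumption of independent Gaussian errors are illustrative choices for this sketch, not the inputs of any published study.

```python
import numpy as np

F_2X = 3.44  # assumed forcing for doubled CO2, W m^-2


def energy_budget(delta_t, delta_f, delta_q, f_2x=F_2X):
    """Energy-budget estimates: TCR = F2x*dT/dF and ECS = F2x*dT/(dF - dQ)."""
    tcr = f_2x * delta_t / delta_f
    ecs = f_2x * delta_t / (delta_f - delta_q)
    return ecs, tcr


# Illustrative central values (K, W m^-2, W m^-2) and 1-sigma uncertainties chosen for this sketch.
central = dict(delta_t=0.75, delta_f=1.95, delta_q=0.65)
sigma = dict(delta_t=0.10, delta_f=0.30, delta_q=0.14)

ecs_c, tcr_c = energy_budget(**central)

# Sample each input independently from a Gaussian and push the samples through the same relations.
rng = np.random.default_rng(0)
n = 100_000
samples = {k: rng.normal(central[k], sigma[k], n) for k in central}
ecs_s, tcr_s = energy_budget(**samples)

p5, p50, p95 = np.percentile(ecs_s, [5, 50, 95])
print(f"central ECS {ecs_c:.2f} K, TCR {tcr_c:.2f} K")
print(f"ECS 5-95%: {p5:.2f}-{p95:.2f} K (median {p50:.2f} K)")
```

Because ΔF − ΔQ sits in the denominator, modest uncertainty there stretches the upper tail of ECS much more than the lower tail.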

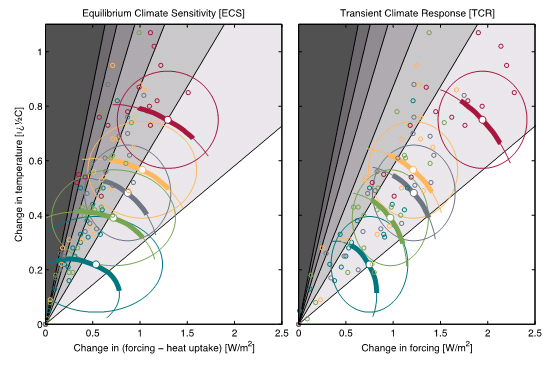

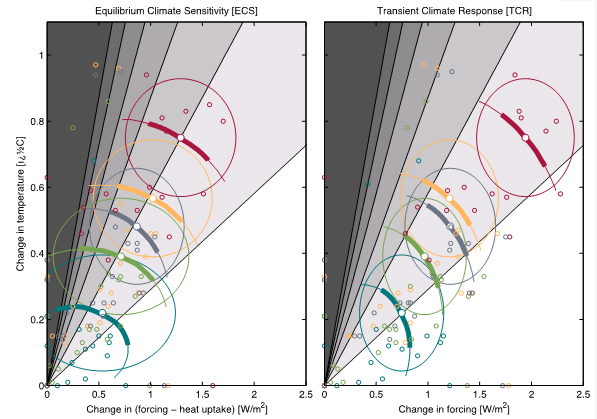

Particularly relevant for their analysis is the lack of global coverage in the observed HadCRUT4 surface temperature data record. Figure 2 compares the latest generation of CMIP5 models with the low climate sensitivity “observational data” analysis of Otto et al. (2013). In this figure the models have slightly higher climate sensitivity than suggested by the observations. However, in Figure 3, the CMIP5 models have been reanalysed using the same coverage as the surface temperature observations. In this figure, uncertainty ranges for both ECS and TCR are similar across model estimates and the observed estimates. This indicates that using HadCRUT4 to estimate climate sensitivity likely also results in a low bias.
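A minimal illustration of the coverage issue: if unsampled regions warm faster than sampled ones, an area-weighted mean over the covered regions alone understates global warming. The zonal warming profile and the 70° coverage cut-off below are hypothetical; real HadCRUT4 gaps are irregular and not confined to the poles.

```python
import numpy as np

# Hypothetical zonal-mean warming (K) that increases toward high latitudes (polar amplification).
lats = np.arange(-87.5, 90.0, 5.0)                  # 5-degree latitude band centres
warming = 0.6 + 0.6 * (np.abs(lats) / 90.0) ** 2     # illustrative, not observed values
weights = np.cos(np.deg2rad(lats))                  # relative area of each band

# Hypothetical observational coverage: bands poleward of 70 degrees are missing.
covered = np.abs(lats) < 70.0

full_mean = np.average(warming, weights=weights)
masked_mean = np.average(warming[covered], weights=weights[covered])
print(f"full coverage: {full_mean:.3f} K, covered bands only: {masked_mean:.3f} K")
```

If the unsampled regions warmed more slowly, the same calculation would give the opposite bias, which is why a like-for-like comparison applies the observational mask to the models as well.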

Summary:

These are two reasons why the Lewis & Crok estimates of future warming may be biased low. Nevertheless, their methods indicate that we can expect a further 2.1°C of warming by 2081-2100 using the business-as-usual RCP 8.5 emissions scenario, much greater than the 0.8°C warming already witnessed.

References:

Forster and Gregory, 2006, J. Climate

Forster et al., 2013, J. Geophys. Res.

Gregory et al., 2002, J. Climate

Gregory and Forster, 2008, J. Geophys. Res.

Jones et al., 2013, J. Geophys. Res.

Otto et al., 2013, Nature Geoscience

One has to wonder what planet or universe you people write from.

This hastily written reply will be remembered as a sad tale of obfuscation. It does zero to resolve the lifelong problem of climate modeling, namely the inability to restrict the range of possibilities despite all the effort put in the last 35 years.

Lewis & Crok may have underestimated. That means they may have not. Their systematic problems are especially relevant for low emission scenarios. Then nobody believes there will be any lowering in emissions any time soon. Etc etc.

Ultimately, any idiot from the street can claim sensitivity is within the decades-old IPCC range. So instead of these futile and sterile PR attempts, why don’t you do the right scientific thing and come up with something better than Lewis & Crok?

Maurizio – we have been doing the right scientific thing for decades by carefully sifting and assessing the science through the IPCC process, not making value judgements about which evidence to include and which to ignore.

It is great to see the GWPF accepting that business-as-usual means significant further warming is expected. Now we can move the debate to what to do about it.

cheers,

Ed.

Ed – stop circling the wagons. You weren’t there, decades ago to tell.

Back to basics. Lewis & Crok lower the old IPCC range. Furthermore, they make it narrower.

Your guest instead just argued that Lewis & Crok may be wrong, and that we need to stick to the old, wide, high IPCC range.

If your guest is right, climate modelling is pretty much useless and hopeless, apart from a vague feeling (utterable by “any idiot from the street…”) that things ought to be warmer in the future (volcanic eruptions notwithstanding). That cannot be acceptable given all the money invested in it. This is policy-relevant stuff, not basic research.

The right thing to do then is to stop trying to answer science in five minutes on a Wednesday night and come back with a suitable riposte. Lewis & Crok challenged you modellers to provide a more useful range. Provide it, or disappear into policy oblivion.

ps further warming is expected. I am amazed you’d even think the GWPF has ever said otherwise. In what echo chamber have you been hiding for the past five years? The issue is how much, when by, at what rate, with what consequences. Thirty-five years of identical range of estimates provide zero clue about that.

“Sceptics” too often ignore the rather large accumulation of evidence from paleoclimate behaviour. For example, Rohling et al. (2012):

This issue does *not* simply hinge on GCMs-vs-“observational” estimates. However convenient it may be for contrarians to pretend that it does.

[snip – this is a scientific blog – please keep the comments scientific rather than political]

My comment was not political. Observational data should drive scientific models and not the inverse. My main point was that the last 4 IPCC reports must have had an effect to dissuade the 5th report authors from backtracking on previous dire predictions based on new flat temperature data.

Temperature has not been flat.

Ed Hawkins from his comment seems to me blissfully unaware of what to the educated layman at least appears to be the absolutely huge gap between current understanding (still pretty limited but making some progress) of climate sensitivity to CO2, and current understanding (infinitesimal by comparison) of natural as opposed to man-made drivers of global climate. Given the relative efforts put into understanding human influence (massive) and understanding natural change (tiny), this is probably unsurprising.

Hi Gillespie,

Not true – massive effort goes into understanding climate variability (e.g. see many pages on this blog) and natural forcings (e.g. read the IPCC report).

cheers,

Ed.

I’ve read way more peer-reviewed articles that deal with paleoclimate and climate changes outside of direct anthropogenic forcing than how the climate is necessarily changing now. Go here:

http://hetaylor.ca/enviro/gwnews.html#AWOGN20140316_Journals

and go through the archive. You’ll find many more articles on climate that make no mention of climate change but definitely have implications for it.

The mere fact that this response to Lewis and Crok’s paper has been hurriedly prepared makes me mightily suspicious that the Lewis & Crok paper has hit a very raw nerve amongst the ‘consensus’ crew.

I have not yet had time to fully digest the Lewis/Crok paper since it has only just appeared, but from a first reading there seems little to argue with and a considerable amount to agree with. The fact that it is largely observationally based provides a credibility that is sadly lacking in the IPCC consensus claims of higher sensitivity.

John,

Lewis & Crok are part of the consensus. Their estimates are well within the IPCC range. And, yes, their method is observationally based, but it still uses a model, and as described in the post this approach is no panacea.

Ed.

More complex models do not imply greater predictive skill Mr Hawkins, in fact there is considerable evidence to suggest otherwise.

John, I agree. Simpler models are often easier to constrain with data and have the virtue of being possible for a single person to understand. Very well known in fluid dynamics.

So Forster is criticising his own method here? How very funny! About the only useful statement is “limited by their own set of assumptions and data issues, making them not necessarily more trustworthy than other techniques”.

Well quite! Everyone is basically guessing; some pessimistically and some optimistically. If you assume all warming from 1900 is manmade, and stick these assumptions in a model and then assume that the current unexpected plateau is not important, then you will always see a high CO2 sensitivity. Alternatively, if you assume, based on observations rather than the obviously inadequate climate models, that current warming is indistinguishable from the natural variation that went before 1950 (which is rather more plausible imo), then you get a CO2 sensitivity of zero degrees. These assumptions are otherwise known as Bayesian priors and the bias potential is obvious. One significant bias is that people whose livelihood depends on a high sensitivity will naturally choose the former interpretation because the latter one would put many of them out of a job. Another bias would be that if you disliked growth, cars and fossil fuel use in general then you will be more inclined to pin something on them. It has not escaped our attention that the previous global cooling scare was also blamed on fossil fuels, and wilder weather was also predicted for that cooler environment.

However, inherent in the more pessimistic assumptions is a correspondingly optimistic notion that it could be easy to move away from fossil fuels if we made them more expensive, and that by being pessimistic we will be doing future generations a big favour. Alas, I hope it is now apparent that such is not the case: we are currently driving up the price of fuel through green taxes, which causes suffering in the shorter term for no actual CO2 reduction, and if in the medium term it causes blackouts and bankruptcy we know who to blame. Ironically, the switch to shale gas, the ‘bête noire’ of environmentalists, is doing more to reduce emissions than anything else. I urge you all to stop obscuring the fact that you are just making it all up: you do not know any more than any man in the street where the temperature is going, you just pretend that you do.

No methods to derive TCR are perfect, or ever will be, including the “observational” estimates described here. The scientific thing to do is assess all the evidence from different methods, taking into account the caveats, assumptions, uncertainties from each and arrive at an overall assessment. This is what the IPCC does.

Ed.

You say that if you mask the CMIP5 models (presumably mostly the poles) so their coverage is the same as HadCRUT, the CS comes into line with the observational studies. You suggest that this indicates a cool bias in the observational studies. However, equally it could mean that the models run too hot at the poles. We already know that CMIP5 models run too hot (aerosols, the pause, Lewis/Crok Fig 3). We also note that global sea ice levels remain stubbornly around their long-term average level.

Should we not then conclude that the models are wrong?

HadCRUT4 lacks coverage in Africa, ME, Central Asia, Amazon Basin, areas of Australia *and* the poles (Cowtan & Way (2014) Coverage bias in the HadCRUT4 temperature series and its impact on recent temperature trends).

We might more readily conclude that:

– Observations are biased low

– There is no basis for the strong claim that “the models are wrong”

– Lewis’ aerosol estimate is too low (and you know this, because it has been brought to your attention by Richard Betts)

– Lewis’ estimates of ECS are biased low for these and other reasons

– You are not being sceptical enough

However, equally it could mean that the models run too hot at the poles.

There is data available for the Arctic, not included in the HadCRUT4 analysis, which indicates otherwise. Satellite data up to 85°N, Arctic buoys and weather stations in the surrounding covered area. These data suggest rapid warming over the past thirty years and over the past century, in-line with model results.

The uncovered Antarctic region is less clear, and the models aren’t particularly consistent with each other there either.

In any case your argument is weak. We have CS estimates using observations without global coverage, and then comparisons to CMIP5 models with global coverage. Clearly this is not a like-for-like comparison so it makes sense to test how much of a difference it makes – the answer is apparently “quite a bit”. If we were to assume no information about trends in uncovered regions we can say it’s possible the models are running too hot in those areas, but it’s equally possible they’re not running hot enough – you didn’t appear to account for that possibility.

[Another relevant issue not often mentioned is that CMIP5 outputs used in these analyses are for global surface-air temperatures, whereas observations are LandSAT+OceanSST. This difference likely also produces a small cooling bias in observational trends compared to those from models.]

We already know that CMIP5 models run too hot

You seem to be engaging in a circular argument here. You “know” that CMIP5 models run hot, with the specific inference that they are too sensitive (do you mean all of them, some of them, on average?), therefore any evidence which indicates to the contrary must be wrong.

Lewis and Crok’s Figure 3 is subject to the same coverage and SST/SAT biases which have been mentioned here. Nevertheless, accounting for these biases still indicates the most recent 30-year trend is near the low end of the CMIP5 ensemble. Does that mean the models, on average, are oversensitive in a general sense? Not necessarily. Periods of around 30 years can be significantly influenced by natural internal variability (not mentioning potential forcing discrepancies at this point). This influence is apparent in the model ensemble, hence the trend variation depicted in Figure 3, albeit different models appear to produce markedly different amounts of interdecadal variance.

If you look at the bigger picture long term observed surface temperature changes appear to be clearly consistent with the model ensemble, although there is substantial variation in the latter (Note the labels indicating the steps taken to produce a like-for-like comparison, also the model data only goes up to the end of the historical run – 2005). From the same data time series for 30-year trends indicate a few occasions in the past where observed trends have gone outside the “prediction” envelope of the CMIP5 ensemble, both low and high, yet the centennial warming is still consistent.
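The point about 30-year trends can be illustrated with a toy series: a constant forced trend plus AR(1) red noise standing in for internal variability. The forced rate, autocorrelation and noise amplitude are hypothetical values for illustration, not estimates for the real climate. The sketch compares the spread of 30-year and 100-year OLS trends across many realisations.

```python
import numpy as np

rng = np.random.default_rng(1)

FORCED_TREND = 0.015    # hypothetical forced warming rate, K/yr
PHI = 0.6               # hypothetical lag-1 autocorrelation of annual internal variability
NOISE_SD = 0.1          # hypothetical innovation standard deviation, K
N_YEARS = 150
N_REALISATIONS = 2000

years = np.arange(N_YEARS)

# AR(1) internal variability, generated for all realisations at once.
shocks = rng.normal(0.0, NOISE_SD, (N_REALISATIONS, N_YEARS))
noise = np.zeros((N_REALISATIONS, N_YEARS))
for i in range(1, N_YEARS):
    noise[:, i] = PHI * noise[:, i - 1] + shocks[:, i]

temps = FORCED_TREND * years + noise


def ols_trends(series, window):
    """OLS slope (K/yr) over the final `window` years of each realisation."""
    t = years[-window:]
    return np.polyfit(t, series[:, -window:].T, 1)[0]


trend_30 = ols_trends(temps, 30)
trend_100 = ols_trends(temps, 100)
print(f"30-yr trends:  mean {trend_30.mean():.4f}, sd {trend_30.std():.4f} K/yr")
print(f"100-yr trends: mean {trend_100.mean():.4f}, sd {trend_100.std():.4f} K/yr")
```

With these settings the 30-year trends scatter several times more widely about the forced rate than the 100-year trends do, even though both are centred on it.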

“Periods of around 30 years can be significantly influenced by natural internal variability”.

Like, say, 1910-1940 and 1970-2000?

Too often, unspecified “natural internal variability” is invoked to explain away periods of less warming. An even-handed approach demands that natural internal variability be considered equally in periods of more warming.

Then why is GAT ~0.8C higher than it was in ~1850? Natural variability averages out over time.

BBD: “Then why is GAT ~0.8C higher than it was in ~1850? Natural variability averages out over time.”

First, I think you mean natural *unforced* variability.

Secondly, you can get shifts in operating points of complex systems, where the system shifts to a new stability point, and never moves back (until something external bangs on the system hard enough).

Even allowing this, over what time scale?

As long as you’re in the 1/f^n portion of the spectrum, it doesn’t average to zero…. this is the famous random walk pattern, where the observed variance increases with the duration of the observation period.

[Note this is not a superficial comment on the likelihood that the current warmer temperatures are due to just natural variability, which I actually think is improbable.

Nor do I suggest that on sufficiently long time scales that the Earth’s system would resemble a random walk, or that temperature fluctuations would retain a 1/f^n spectrum form.]
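Carrick's point about 1/f^n noise can be checked numerically with a random walk (the n = 2 case) and white noise. The series below are synthetic and, as the comment itself notes, are not meant as a model of the Earth system. The sketch averages the sample variance inside non-overlapping windows of different lengths.

```python
import numpy as np

rng = np.random.default_rng(2)
n = 100_000
white = rng.normal(0.0, 1.0, n)   # white noise: flat spectrum
walk = np.cumsum(white)           # random walk: power spectrum falls off as 1/f^2


def mean_window_variance(x, window):
    """Average sample variance of x within non-overlapping windows of length `window`."""
    segments = x[: len(x) // window * window].reshape(-1, window)
    return segments.var(axis=1, ddof=1).mean()


for window in (10, 100, 1000):
    print(window,
          round(mean_window_variance(white, window), 2),
          round(mean_window_variance(walk, window), 1))
```

For white noise the within-window variance stays near 1 whatever the window length, while for the random walk it grows roughly in proportion to the window length.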

Not necessarily (TSI) but I take your point. But like you, I don’t believe in a self-propelling climate system. Random walks in climate do not go very far before conservation of energy steps in.

“natural variability averages out over time”. Wrong. I don’t have much trouble accepting that natural internal variability is very likely to average out over time, but natural variability clearly does not average out over time. Certainly not over any meaningful timescale anyway. Otherwise there would not be ice ages, interglacials, etc, and we would not be expecting anything significant to happen in the next 5bn years.

Why is GAT ~0.8C higher than it was in ~1850? Possibly (probably?) for the same reason that it is almost certainly lower than 1,000, 2,000, 3,000 years ago : natural variability.

Unforced natural variability simply moves energy around within the climate system. It does not create energy. Therefore it cannot generate long-term trends and it averages out over time. This is not in dispute, btw, so you are on your own here.

Glacial cycles are orbitally forced, which is why Carrick added the qualifier *unforced* natural variability above. You are getting muddled up, but I have to take some of the blame for writing sloppily.
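The counter-argument, that radiative damping stops unforced variability from accumulating, can be sketched with a Hasselmann-type stochastic energy-balance model, C dT/dt = −λT + noise. The heat capacity, feedback parameter and noise amplitude below are hypothetical illustrative values. Unlike an undamped random walk, the damping term pulls anomalies back toward zero, so averages over longer windows get closer to zero.

```python
import numpy as np

# Hypothetical parameters for a stochastic energy-balance model: C dT/dt = -LAMBDA * T + noise
C = 8.0          # mixed-layer heat capacity, W yr m^-2 K^-1
LAMBDA = 1.3     # radiative damping (feedback) parameter, W m^-2 K^-1
NOISE_SD = 0.5   # standard deviation of the random annual flux, W m^-2
DT = 1.0         # time step, years
N_YEARS = 100_000

rng = np.random.default_rng(3)
flux = rng.normal(0.0, NOISE_SD, N_YEARS)
temp = np.zeros(N_YEARS)
for i in range(1, N_YEARS):
    # Forward Euler step: the -LAMBDA*T term radiates anomalies away, so they cannot accumulate.
    temp[i] = temp[i - 1] + DT * (flux[i] - LAMBDA * temp[i - 1]) / C


def window_mean_spread(x, window):
    """Standard deviation of the means of non-overlapping windows of length `window`."""
    segments = x[: len(x) // window * window].reshape(-1, window)
    return segments.mean(axis=1).std()


for window in (10, 100, 1000):
    print(window, round(window_mean_spread(temp, window), 4))
```

With these settings the spread of 1000-year means is several times smaller than that of 10-year means.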

These claims are contradicted by the evidence. See e.g. PAGES2K (2012):

The evidence suggests that the last decade is probably hotter than any time since the end of the Holocene Climatic Optimum ~6ka (Marcott et al. 2013). The HCO was orbitally forced, so there’s no mystery as to why it was warmer then. Nor is there any mystery as to why it is getting so warm now.

“However, equally it could mean that the models run too hot at the poles.”

Andy, that assertion would be sad if it weren’t so funny. In the real world, the models demonstrably run cold relative to the poles. Perhaps worse is that they can’t manage a transition from current to mid-Pliocene-like conditions (in equilibrium with *current* CO2 levels).

Ed,

That’s very interesting. I had wondered if this had ever been done as it seemed an obvious test. Has anyone done a comparison between an Otto et al. analysis using Cowtan & Way with the ECS model estimates with global coverage?

Not that I know of. My guess is that this would increase the estimates of TCR, but it would be interesting to find out. Maybe Piers could do it?!

Ed.

Great idea, I’ve been thinking we should do it with lots of datasets in fact, including satellite tropospheric temperatures and reanalyses. I was really surprised masking made so much difference, but, just as you say in your blog, adding a few years of data also makes a big difference, which really adds to the evidence that these techniques are not that robust.

Just out of interest, does anyone have any comments about this result from Lewis (2013)?

My understanding is that the first result is obtained using data from 1945-1998 (I think). This gives an ECS of 2-3.6K. Adding 6 years of unused model data (which I understand takes the time interval to 2001) changes this to 1.2-2.2K. That seems like a remarkable change given that it’s only an increase of about 12% in data. Wouldn’t one expect a method that estimates something like the ECS to be reasonably insensitive to relatively small changes in the time interval considered? Or have I misunderstood what Lewis (2013) has done here?

I agree. This is potentially a problem with using the observational period to derive ECS. Maybe TCR, which is the most relevant quantity for projecting warming over the coming decades, is less sensitive?

Ed.

My understanding is that in Lewis (2013) the comparison is done with models that have different ECS, aerosol forcings and ocean diffusivity. The method then determines which models produce the best fit to the observations. So, if I’ve got this right, Lewis (2013) cannot estimate the TCR because I don’t think that was one of the model variables. Of course, I guess each model should have a TCR value, but I can’t find any mention of this in Lewis (2013). Given that this is based (I think) on Piers’ earlier work, he could comment more knowledgeably than I can.

Apologies, I think I may have just confused Forest with Forster 🙂

The point of the Lewis 2013 study was to recalculate Forest et al 2006 using an objective prior rather than the uniform prior in ECS used by Forest. The shift in the long tail is due to that rather than up to date data.

In the report, Lewis/Crok note of the Otto et al study:

“In fact, best ECS estimates based on data for just the 1980s and just the 1990s are very similar to those based on data for 1970–2009, which demonstrates the robustness of the energy budget method.”
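The effect of the prior on the upper tail can be illustrated with a hypothetical Gaussian constraint on the feedback parameter λ = F2x/S. The sketch compares a prior uniform in ECS (S), as used by Forest et al. (2006), with a prior uniform in λ. The uniform-in-λ prior is only a simple stand-in and is not the objective prior Lewis (2013) actually derived; the constraint values and the 10 K grid cut-off are also assumptions.

```python
import numpy as np

F_2X = 3.7                          # assumed forcing for doubled CO2, W m^-2
LAMBDA_OBS, LAMBDA_SD = 1.6, 0.5    # hypothetical Gaussian constraint on feedback, W m^-2 K^-1

s = np.linspace(0.5, 10.0, 10_000)  # ECS grid, K (the upper limit acts as a prior cut-off)
likelihood = np.exp(-0.5 * ((F_2X / s - LAMBDA_OBS) / LAMBDA_SD) ** 2)


def posterior_percentiles(prior, probs=(0.05, 0.5, 0.95)):
    """Percentiles of the posterior likelihood * prior on the uniform ECS grid."""
    post = likelihood * prior
    cdf = np.cumsum(post)
    cdf /= cdf[-1]
    return [float(np.interp(p, cdf, s)) for p in probs]


# Prior uniform in ECS: flat density in s.
uniform_s = np.ones_like(s)
# Prior uniform in lambda = F_2X / s, expressed as a density in s via |d lambda / ds| = F_2X / s**2.
uniform_lambda = F_2X / s ** 2

print("uniform in ECS:   ", np.round(posterior_percentiles(uniform_s), 2))
print("uniform in lambda:", np.round(posterior_percentiles(uniform_lambda), 2))
```

The uniform-in-ECS prior leaves a long upper tail whose extent depends on the grid cut-off, and that is where most of the difference between the two posteriors shows up.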

One should distinguish between Lewis (2013) and Otto et al. (2013). In Lewis (2013) the result when using the same time period as Forest et al. (2006) has quite a large overlap [2.1 – 8.9K compared to 2.0 – 3.6K]. Adding 6 years of new data, changes the ECS range to 1.0 – 2.2K. So, yes if you use the same time interval as Forest et al. (2006) you can reduce the uncertainty in the high end, but why would one not be concerned by the significant change in the estimate when adding only 6 years more data?

Your quote appears to be based on the Otto et al. work, not Lewis (2013). I agree that energy budget constraints are quite useful, but I think many would argue that they do suffer from various issues related to regional variations in ocean heat uptake, uncertainties in aerosol forcing, and missing coverage in the temperature datasets. If one assumes that the recent Cowtan & Way result has merit, then that would increase the ECS and TCR estimates from Otto et al. by a few tenths of a degree.

Having referred to the Lewis 2013 paper (a draft – struggling to find the final version) he says:

“We resolve these issues by employing only sfc and do diagnostics, revising these to use longer diagnostic periods, taking advantage of previously unused post 1995 model simulation data and correctly matching model-simulation and observational data periods (mismatched by nine months in the F06 sfc diagnostic). ”

So it’s an awful lot more than just using an extra 6 years of data.

But isn’t part of that the improved method? Hence they can reduce the uncertainty in the high end when considering the same time interval as Forest et al. (2006) but, for some reason, the range changes dramatically when the improved method is applied to 6 years of unused model data. If the model were robust, I would expect it not to be so sensitive to a small increase in data, as it seems to be.

I don’t think so. 2.0 – 3.6K involves correcting method only. 1.0 – 2.2K is based on fixing the data too.

The abstract seems to imply that it does, but having read the paper a bit more in the last hour or so, there may be more to it than simply 6 years more data. I don’t think, however, that that changes the point I was getting at much. One would hope that such a method would be reasonably insensitive to relatively small changes in some assumptions.

Okay, so I’ve had another look. The paper actually says

My understanding is that Forest et al. (2006) used a 5-decade to 1995 temperature dataset, a 37-year deep ocean dataset (from Levitus), and an upper atmosphere diagnostic. When Lewis’s improved method was used to compare with Forest et al. (2006), they found that the results didn’t depend on the upper atmospheric diagnostic. Hence, when they added the 6 years of extra data (which is then referred to as a 6-decade to 2001 dataset), the upper atmosphere diagnostic was not included and the deep ocean diagnostic was extended from 37 years to 40 years (i.e., to 1998). I would argue that that is a relatively small change to the assumptions that seems to have produced quite a dramatic change in the ECS estimate.

I also found this in the paper:

“Our 90% range of 1.2–2.2 K for Seq using the preferred revised diagnostics and the new method appears low in relation to that range, partly because uncertainty in non-aerosol forcings and surface temperature measurements is ignored. Incorporation of such uncertainties is estimated to increase the Seq range to 1.0–3.0 K, with the median unchanged (see SI). “

Yes, I also found that, but I still don’t think that quite removes the issue that it appears that a relatively small change in the data used makes quite a substantial change to the estimated ECS range.

The difference seems to be explained by Nic Lewis in this article by the Register today where he talks about priors

http://www.theregister.co.uk/2014/03/06/global_warming_real_just_not_as_scary_as/?page=3

Libardoni and Forest released a correction recently which showed that the impact of the timing mismatch was negligible. Apparently the other change in method was removal of information about the vertical structure of temperature changes. I’d be interested in a break-down of what proportional impact Nic Lewis believes each of these had.

To speculate, with no real information, on the difference due to adding six years more data: The end of Forest’s timeline coincides with the Pinatubo eruption. We know that climate models tend to overestimate the impact of Pinatubo, in large part due to coincident El Niño activity. If this mismatch due to volcanic activity occurs in the comparison between Forest’s model runs and observations it becomes easier for higher sensitivity parameters to match observations due to a volcanic-induced cool-bias. Running on to 2001 would remove the impact of the volcanic mismatch.

Ed, it has always been about what we are going to do about the warming; there would be no global warming/climate change debate if we weren’t being asked to “do something about it”. Moreover, the whole history of this charade has swirled about the notion that the “science is settled so let’s get on with reducing CO2 emissions, no more arguments because it’s too late.” Evidenced by the rush to prove climate sensitivity is high, clearly a key plank in the “let’s get on with it” camp’s arguments.

So as you say let’s get on to solving the problem and start by asking ourselves will it be feasible to reduce CO2 emissions at all given that we’re not remotely in control of the growth? And then, if we can we should consider whether alternate forms of energy will be available in a time scale that will mean the reduction of CO2 emissions will not impact adversely on the people alive today and for the next few generations. Then we should decide. Our policies should not be driven by a minority of environmental scaremongers but by the desire to understand what it means to trade hard times now for unknown hard times in the future.

[comment slightly snipped to remove political insinuations]

Piers Forster

Hi Piers, I’ve just seen your comments on my and Marcel Crok’s report. In your haste you seem to have got various things factually wrong. You claim that the warming projections in our report may be biased low, citing two particular reasons. First:

” the Gregory and Forster (2008) method employed in the Lewis & Crok report to make projections (by scaling TCR) leads to systematic underestimates of future temperature change”

I spent weeks trying to explain to Myles Allen, following my written submission to the parliamentary Energy and Climate Change Committee, that I did not use for my projections the unscientific ‘kappa’ method used in Gregory and Forster (2008).

Unlike you and Jonathan Gregory, I allow for ‘warming-in-the-pipeline’ emerging over the projection period. Myles prefers to use a 2-box model, as also used by the IPCC, rather than my method. His oral evidence to the ECCC included reference to projections he had provided to them that used a 2-box model.

I agree that the more sophisticated 2-box model method is preferable in principle for strong mitigation scenarios, particularly RCP2.6. If you took the trouble to read our full report, you would see that I had also computed projections using a 2-box model. The results were almost identical to those using my simple TCR-based method, in fact slightly lower.

So your criticisms on this point are baseless.

Secondly, you say:

“in Figure 3, the CMIP5 models have been reanalysed using the same coverage as the surface temperature observations. In this figure, uncertainty ranges for both ECS and TCR are similar across model estimates and the observed estimates. This indicates that using HadCRUT4 to estimate climate sensitivity likely also results in a low bias.”

I have found that substituting data from the NASA/GISS or NOAA/MLOST global mean surface temperature records (which do infill missing data areas insofar as they conclude is justifiable) from their start dates makes virtually no difference to energy budget ECS and TCR estimates. So your conclusion is wrong.

Perhaps what your figure actually shows is more what we show in our Fig. 8. That is, most CMIP5 models project significantly higher warming than their TCR values imply, even allowing for warming-in-the-pipeline.

Nic, I have a physicsy question for you. If one assumes a surface emissivity of around 0.6, one can show that a rise of 0.85K since pre-industrial times produces an increase in outgoing flux of about 2.8 W/m^2. We still have an energy imbalance (average over the last decade) of around 0.6 W/m^2. To reach energy equilibrium the outgoing flux would have to increase by this amount. The estimates for the net anthropogenic forcing used in Otto et al. (for example) are around 2 W/m^2. So, unless I’ve made some silly mistake, that would suggest that feedbacks are providing a radiative forcing that’s something like 60% of the anthropogenic forcing.

Now if I consider your RCP8.5 estimate which suggests that surface temperatures would rise by 2.9K relative to 1850-1900, that would be associated with an increase in outgoing flux of around 9.6 W/m^2. RCP8.5 is associated with an increase in anthropogenic forcings of 8.5W/m^2 (I think). Now, if today’s estimates are anything to go by, we might expect feedbacks to be producing radiative forcings that are (at least?) 60% of the anthropogenic forcings. That would mean a total change in radiative forcing of around 13.6 W/m^2. If this is a valid way to look at this, your estimate would suggest an energy imbalance, in 2100, of 4W/m^2. Is there any evidence to suggest that we could ever really be in a position where we could have that kind of energy imbalance? I don’t know the answer, but my guess might be that it would be unlikely.
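The back-of-envelope numbers above can be reproduced as follows, using the un-linearised form F = εσ(T1^4 − T2^4) mentioned in a later reply. The baseline temperature of 288 K is an assumption (the comment does not state one), and treating feedbacks as a fixed fraction added to the forcing is the comment's own framing rather than the usual approach.

```python
SIGMA = 5.670374419e-8   # Stefan-Boltzmann constant, W m^-2 K^-4
EPS = 0.6                # effective emissivity used in the comment
T_BASE = 288.0           # assumed pre-industrial surface temperature, K (not stated in the comment)


def outgoing_increase(delta_t, t_base=T_BASE, eps=EPS):
    """Change in outgoing flux, eps*sigma*((T + dT)^4 - T^4), in W m^-2."""
    return eps * SIGMA * ((t_base + delta_t) ** 4 - t_base ** 4)


# Present day: 0.85 K warming, 0.6 W m^-2 imbalance, ~2 W m^-2 net anthropogenic forcing.
today_out = outgoing_increase(0.85)
feedback_fraction_today = (today_out + 0.6 - 2.0) / 2.0

# RCP8.5 in 2100: 2.9 K warming, 8.5 W m^-2 forcing, feedbacks assumed to add a further 60%.
out_2100 = outgoing_increase(2.9)
imbalance_2100 = 8.5 * (1 + 0.6) - out_2100

print(f"today: outgoing +{today_out:.2f} W/m^2, implied feedback fraction {feedback_fraction_today:.2f}")
print(f"2100: outgoing +{out_2100:.2f} W/m^2, implied imbalance {imbalance_2100:.2f} W/m^2")
```

Under these assumptions the present-day inputs imply a feedback fraction of roughly 0.69, and the 2100 figures come out at about 9.6 W/m^2 of extra outgoing flux and an imbalance of about 4.0 W/m^2, matching the numbers quoted.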

Anders,

Typically feedbacks are accounted for by adjusting the radiative restoration strength (F_2xco2 / T_2xco2) away from the Planck response, rather than applying them to the radiative forcing. A radiative restoration strength of 2 W/m^2/K is associated with an effective sensitivity of ~1.9 K, which is close to what (I believe) Lewis uses in the 2-box model (the difference between 3.3 W/m^2/K and 2 W/m^2/K would imply positive feedbacks of ~1.3 W/m^2/K). This would mean that outgoing radiation would increase by 2 W/m^2/K * 2.9 K = 5.8 W/m^2 as a result of the surface temperature increase of 2.9 K. So you would actually have an energy imbalance of ~2.7 W/m^2 rather than 4 W/m^2 in 2100 if you used your 8.5 W/m^2 for the RCP8.5 scenario. If you use the adjusted forcing from Forster et al. (2013) for RCP8.5, this leaves an imbalance of ~2.0 W/m^2. Still rather large, but to avoid this, the ocean heat uptake efficiency would have to decrease as the imbalance increases… Do you know of any reason why this would occur?
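Troy's alternative can be sketched the same way: the radiative response is a single restoring strength multiplied by the warming, rather than a Stefan–Boltzmann term plus extra forcing. The 3.7 W/m^2 doubled-CO2 forcing below is an assumed value not given in the comment; the 2 W/m^2/K restoring strength, 3.3 W/m^2/K Planck response, 2.9 K warming and 8.5 W/m^2 forcing are the figures quoted above.

```python
F_2X = 3.7          # assumed doubled-CO2 forcing, W/m^2 (not given in the comment)
PLANCK = 3.3        # Planck-only restoring strength quoted above, W/m^2/K
LAM = 2.0           # net radiative restoration strength quoted above, W/m^2/K

effective_sensitivity = F_2X / LAM       # ~1.85 K per doubling ("~1.9 K")
net_feedback = PLANCK - LAM              # ~1.3 W/m^2/K of positive feedback

delta_t_2100 = 2.9                       # K above 1850-1900 under RCP8.5
radiative_response = LAM * delta_t_2100  # 5.8 W/m^2 extra outgoing radiation
imbalance_2100 = 8.5 - radiative_response  # ~2.7 W/m^2 with the nominal RCP8.5 forcing

print(f"effective sensitivity {effective_sensitivity:.2f} K, "
      f"feedback {net_feedback:.1f} W/m^2/K, imbalance {imbalance_2100:.1f} W/m^2")
```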

Troy,

I’m going to have to think about what you’ve said. I was just using F = eps sigma (T1^4 – T2^4) with eps = 0.6 to determine the change in outgoing flux if temperature increases by (T1 – T2). You may be right, though. I’ll have to give it more thought in the morning. I guess what I was getting at, though, was that even a modest feedback response would imply that, for RCP8.5 to produce only a 2.9K increase by 2100 relative to 1850-1900, there would have to be quite a substantial energy imbalance in 2100. I don’t actually know what sort of energy imbalance we can sustain, but if the surface warming is associated with a few percent of the energy imbalance, even 2 W/m^2 would suggest a surface warming trend 4 times greater than we have today.

Troy,

Okay, yes, I was a little inaccurate in my quick calculation. I get roughly the same numbers as you do if I attempt to be a little more precise.

I have read your report and think your model is essentially still based on TCR scaling with an extra unphysical fudge factor added. Your argument that my conclusion in the second part is wrong doesn’t make sense; the data in the figures show it.

Piers Forster,

I think it would be helpful for many of us if you expanded on your points of agreement / disagreement given the last comment. Do both of you agree that…

1) The Lewis and Crok approach for predicting temperatures based on TCR is not exactly the same as Gregory and Forster (2008), primarily because an additional value is added (0.15K) for heat “in the pipeline”. Whether this method is *substantially* different from GF08 may be up for debate, as is whether this is an improvement, but the resulting projected value is modified. A 2-box model would be preferred, but this projection would then depend on other properties of the 2-box model as well.

2) Regardless, using this method of projection will tend to underestimate the temperature in 2100 for the RCP4.5 scenario for models, though perhaps not to the degree shown in Fig. 1 above if the “in the pipeline” warming is accounted for. It seems to me this is most likely to result from 3 factors: a) changing ocean heat uptake efficiency (ratio of change in ocean heat content to surface temperature increase) in models, b) changing “effective” sensitivity (the radiative response per degree of surface temperature) in models, or c) models generally have a higher effective sensitivity / TCR ratio than used by the 2-box in Lewis and Crok. What reason(s) would either of you suggest is/are most likely?

Now, one point of disagreement seems to be…

3) Does the model / observation discrepancy in TCR (in Otto et al) primarily arise from the coverage bias in HadCRUTv4? Piers, you seem to suggest that this is indeed the case, although I confess I find it hard to sufficiently read the difference in Fig2 & Fig3 to see this. Nic, you argue that this is not the case.

I also had one more question about Fig 3 above, which, as I mentioned above, I am having some trouble reading/understanding. The little red circles in the graph represent the difference between pre-industrial and the 2000s for the CMIP5 models, correct? My confusion arises because it appears, according to the figure, that most of the models have a surface temperature change of only 0.4-0.6 K over that period. Am I reading that correctly? Even the unmasked (fig 2) observations show 4 models in that range.

Hi Troy, sorry if I was rather terse earlier. You’ve interpreted the comments very effectively though.

1) Yes, Nic adds 0.15K for heat in the pipeline, as far as I can tell. He then adds this onto the temperature change you get from the Gregory and Forster (2008) resistivity approach. I guess perhaps I overstated this idea being unphysical, as Nic is right that some forcing response is in the pipeline. But why choose 0.15K for this? No one really knows what the current energy imbalance or committed warming is. Hansen’s papers suggest a large energy imbalance, around 0.8 Wm-2, which would give a very large estimate of warming in the pipeline, for example.

The reason I said this was unphysical is that it is not related to the same mechanism, or of the same magnitude, as the underestimate you see in Figure 1. It may, by chance, correct this underestimate, but it would be for the wrong reason.

We think the mechanism behind fig 1 is that scenarios with rapid forcing change set up a bigger gradient of temperature in the ocean which more effectively transfers heat downwards, so has lower temp change per unit forcing.

2) The mechanism is above. However, Nic’s pipeline factor may correct the underestimate, or it may not. I don’t think we have any way of knowing. To me this gets to the nub of my point on assumptions. I’m not really trying to say Nic’s results are wrong. I’m just trying to show that model and assumption choices have a huge effect, as there is no perfect “correct” analysis method. Neither Gregory and Forster (2008) nor Lewis (2013) is perfect; both can be legitimately criticized.

3) I don’t think Fig 3 shows categorically that HadCRUT4-derived estimates have a low bias, but I think it shows clearly that coverage could be an issue. Again, this is the point I was trying to make: there is a reason why the Lewis estimate might be low, not that it is low. Not sure if I’ve answered the question here, though…

The graphs didn’t come out perfectly. But I think the temperature change is from 1900; I can check tomorrow. I made them fast, but the numbers don’t look completely wrong compared to Forster et al. (2013) table 3. Some models have really large aerosol forcing and very little T change.

Hi Piers

Thanks for elaborating. A few comments and questions on what you say.

1) I’ve never considered my simplified method of computing projections as using the Gregory and Forster 2008 resistivity approach from preindustrial. It wouldn’t have occurred to me to use what is IMO an obviously unphysical method, and I hadn’t looked at that paper for a long time. Rather, I see my simplified method as an application of the generic definition of TCR in Section 10.8.1 of AR5 WGI, given that the period from 2012 to 2081-2100 is an approximately 70-year timescale and on the higher RCP scenarios there is a gradual increase in forcing over that period. That is a less good approximation for RCP4.5 and not the case for RCP2.6, but in fact that makes little difference.

Clearly, as the climate system is not in equilibrium, one needs to allow for ‘warming in the pipeline’ that can be expected to emerge from 2012 to 2081-2100. Provided it is a realistic figure, that is no more an ‘unphysical fudge factor’ than the treatment of existing disequilibrium in the 2-box model that Myles Allen provided to the Energy & Climate Change Committee, and also used by the IPCC. I think you now realise that.

My 0.15 K addition for this factor was a careful estimate. It is actually conservative compared to what a 2-box model with realistic ocean mixed layer and total depths, fitted to the ECS and TCR best estimates in Marcel Crok’s and my report, implies. I calculated our warming projections using both my simplified and a 2-box model; the results were in line for all scenarios, as stated in a footnote to our report. However, many readers won’t know what a 2-box model is, but are more likely to follow our applying the generic TCR definition and adding an allowance for warming in the pipeline, so we justified and explained that method in the text.
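The simplified projection method described above can be written as a one-line function: scale TCR by the forcing change relative to a CO2 doubling, then add a fixed allowance for warming in the pipeline. This is a minimal sketch, not Nic's actual code; the 3.71 W/m^2 doubling forcing is the AR5 value, and the TCR and forcing-change inputs in the example are hypothetical values chosen so the arithmetic is easy to follow.

```python
F_2X = 3.71  # W/m^2 per CO2 doubling (AR5 value, assumed here)

def tcr_scaled_projection(tcr, delta_forcing, pipeline=0.15, f_2x=F_2X):
    """Projected warming (K) = TCR * (forcing change / F_2x) + pipeline allowance.

    tcr           -- transient climate response, K per doubling
    delta_forcing -- forcing change over the projection period, W/m^2
    pipeline      -- already-committed warming assumed to emerge over the period, K
    """
    return tcr * delta_forcing / f_2x + pipeline

# Hypothetical example: a forcing change equal to one doubling, TCR of 1.35 K
with_pipeline = tcr_scaled_projection(1.35, 3.71)                 # 1.35 + 0.15 = 1.50 K
without_pipeline = tcr_scaled_projection(1.35, 3.71, pipeline=0.0)
print(f"{with_pipeline:.2f} K vs {without_pipeline:.2f} K")
```

Because the pipeline term is a fixed number, it does not vary with the rate at which forcing rises in a given scenario; a two-box model instead lets committed warming emerge from the ocean's heat capacities.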

I think one should place more trust in observations than in Hansen’s estimate. The latest paper on ocean heat uptake (Lyman and Johnson, 2014, J. Clim) shows warming down to 1800 m during the well-observed 2004-11 period that, when added to the AR5 estimates of other components of recent heat uptake, equates to under 0.5 W/m2. With our 1.75 K ECS best estimate, that would eventually give rise to surface warming of <0.24 K. Given the very long time constants involved, less than 0.15 K of that should emerge by 2081-2100.
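The <0.24 K figure follows from assuming the current imbalance would eventually be removed by warming at a rate set by the ECS: eventual warming = N × ECS / F_2x. A minimal sketch, assuming the AR5 doubling forcing of 3.71 W/m^2 (the comment does not state which value was used), comparing the 0.5 W/m^2 figure with the 0.8 W/m^2 Hansen-style imbalance mentioned earlier in the thread. It says nothing about how much of that warming emerges by 2081-2100, which depends on the slow ocean timescales.

```python
F_2X = 3.71  # W/m^2 per CO2 doubling (AR5 value, assumed here)

def eventual_warming(imbalance, ecs, f_2x=F_2X):
    """Surface warming (K) needed to remove a TOA imbalance (W/m^2) with forcing held fixed."""
    return imbalance * ecs / f_2x

for n in (0.5, 0.8):  # 0.5: the observational figure above; 0.8: the Hansen-style estimate
    print(f"N = {n} W/m^2 -> {eventual_warming(n, ecs=1.75):.3f} K eventually")
```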

You say "We think the mechanism behind fig 1 is that scenarios with rapid forcing change set up a bigger gradient of temperature in the ocean which more effectively transfers heat downwards, so has lower temp change per unit forcing."

If that were correct, one would find that the ratio of future warming excluding emerging warming-in-the-pipeline to future forcing change declined from RCP4.5 to RCP6.0 to RCP8.5. That doesn't appear to be so. I'd be interested to discuss the reasons with you directly.
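The ramp-rate question above can be explored with a constant-coefficient two-box energy balance model of the kind mentioned earlier in the thread. This is a minimal sketch with hypothetical parameter values (feedback 1.3 W/m^2/K, heat exchange 0.7 W/m^2/K, heat capacities 8 and 100 W yr m^-2 K^-1), not the model used by either party. Because its step response rises monotonically, warming per unit forcing at the end of a linear ramp comes out lower when the same forcing is reached faster; whether CMIP5 models behave this way across the RCPs is the open question in this exchange.

```python
def two_box_ramp(f_end, ramp_years, lam=1.3, gamma=0.7, c=8.0, c_deep=100.0, dt=0.05):
    """Surface (upper-box) warming, K, when a linear forcing ramp reaches f_end.

    Upper box: c * dT/dt = F - lam*T - gamma*(T - Td)
    Deep box:  c_deep * dTd/dt = gamma*(T - Td)
    Forcing in W/m^2, heat capacities in W yr m^-2 K^-1, time in years.
    Integrated with a simple forward-Euler step.
    """
    t_up = t_deep = 0.0
    for i in range(int(round(ramp_years / dt))):
        forcing = f_end * (i + 1) * dt / ramp_years   # forcing at the end of this step
        exchange = gamma * (t_up - t_deep)             # heat flux into the deep ocean
        t_up += dt * (forcing - lam * t_up - exchange) / c
        t_deep += dt * exchange / c_deep
    return t_up

F_END = 8.5  # W/m^2, hypothetical end-point forcing shared by both ramps
for years in (70, 140):  # same forcing reached over 70 vs 140 years
    ratio = two_box_ramp(F_END, years) / F_END
    print(f"{years}-year ramp: {ratio:.3f} K per W/m^2")
```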

FYI, whilst Gregory & Forster 2008 uses the term 'ocean heat uptake efficiency' for its kappa parameter, I use the term in a different and more general physical sense.

3) In your Fig.3 (which in my haste I confused with Fig. 1 when I wrote before, so my second comment was misplaced), have you used the intersection of the 1860-79 base period (or 1900) and the final period HadCRUT4 coverage to mask the CMIP5 projections, or just the final period?

In any case your figures compare model temperature changes with individual model forcing estimates that (assuming they are from Forster et al, 2013, JGR) are themselves derived from the same model temperature changes, if I recall correctly. I'm not sure it makes sense to do that rather than to use a common reference forcing change for all models (from the RCP or the AR5 forcing datasets).

Nic – although it’s somewhat peripheral to the main point above, I’m troubled by what strikes me as a misinterpretation on your part of OHC uptake as reported by Lyman and Johnson (2014), since underestimating this phenomenon can lead to underestimates of other important values, including effective climate sensitivity. How do you arrive at the conclusion that these authors find uptake down to 1800 m to be “under 0.5 W/m2”? In Table 1 of the version I’m looking at, the figure is given as 0.56 W/m2 “reported as heat flux applied to Earth’s entire surface area”.

Sometimes in an eagerness to find evidence supporting our views, we read what we want to see in the data rather than what’s there. Did you do that here, and also in your testimony to the UK Parliament, or are you recalculating the reported values in some way you don’t specify? Of course, maybe that’s what I’m doing, and if so, I’m sure you’ll point it out.

Fred, yes, I also see a value of 0.56W/m^2 and, as I understand it, it is based on the OHC data for the period 2004-2011 but is then presented as an average across the whole globe. From the perspective of energy budget estimates, presumably this is also slightly lower than what Otto et al. (2013) call the change in Earth system heat content, which includes oceans, continents, ice and atmosphere.
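The “reported as heat flux applied to Earth’s entire surface area” convention amounts to dividing a heat-content trend in joules per year by the number of seconds in a year and by the whole Earth's surface area (about 5.1 × 10^14 m^2), not just the ocean's. A minimal sketch; the 10^22 J/yr input is hypothetical and is not a value from Lyman and Johnson (2014).

```python
SECONDS_PER_YEAR = 365.25 * 24 * 3600   # about 3.156e7 s
EARTH_SURFACE_AREA = 5.1e14             # m^2, whole globe (land and ocean)

def ohc_trend_to_global_flux(joules_per_year):
    """Express an ocean heat content trend as a flux averaged over Earth's entire surface."""
    return joules_per_year / (SECONDS_PER_YEAR * EARTH_SURFACE_AREA)

# Hypothetical trend of 1e22 J/yr, for illustration only
print(f"{ohc_trend_to_global_flux(1e22):.2f} W/m^2")
```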

Fred Moolten says: March 7, 2014 at 3:58 pm

“Nic – although it’s somewhat peripheral to the main point above, I’m troubled by what strikes me as a misinterpretation on your part of OHC uptake as reported by Lyman and Johnson (2014), since underestimating this phenomenon can lead to underestimates of other important values, including effective climate sensitivity. How do you arrive at the conclusion that these authors find uptake down to 1800 m to be “under 0.5 W/m2”? In Table 1 of the version I’m looking at, the figure is given as 0.56 W/m2 “reported as heat flux applied to Earth’s entire surface area”.

Sometimes in an eagerness to find evidence supporting our views, we read what we want to see in the data rather than what’s there. Did you do that here, and also in your testimony to the UK Parliament.”

And Then There’s Physics says: March 7, 2014 at 4:26 pm

“Fred, yes I also see a value of 0.56W/m^2 and, as I understand it, is based on the OHC data for the period 2004-2011 but is then presented as an average across the whole globe.”

Thanks, Fred and And Then There’s Physics (pity you’re afraid to identify yourself, though).

Fred’s statement “Sometimes in an eagerness to find evidence supporting our views, we read what we want to see in the data rather than what’s there” is spot on. That’s what you’ve both done, and probably what Lyman, Johnson, and all the much-vaunted peer reviewers of this paper also did.

If you actually look at the (graphical) data in Lyman and Johnson (2014), it is obvious that for their main-results REP OHCA estimate, the 2004-11 change for 0-1800 m averaged over the globe is no more than 0.30 W/m2, notwithstanding that the figure given in the main results table is different.

This sort of error doesn’t surprise me, I’m afraid – we all make mistakes. I’ve found major errors or deficiencies in quite a few peer reviewed climate science papers. Why are people like you so trusting of claims in climate science papers that are in line with the consensus, when they will almost certainly have undergone a far less probing peer review process than claims that disagree with the consensus?

Nic,

Why does it matter?

I’ll grant you that the REP line in Fig. 4 does seem inconsistent with 0.56 W/m^2 averaged over the globe. Maybe someone else here understands the discrepancy.

Why do some people think comments like this are a good way in which to engage in a scientific discussion? Simply pointing out the apparent discrepancy might have been sufficient.

Nic – given the explicit and repeated statement in Lyman and Johnson indicating a value of 0.56 W/m2, I suspect you are probably wrong in basing your disagreement on a hard-to-read Figure 4 that may have been drawn slightly inaccurately. I am more certain you are wrong in dogmatically rejecting the 0.56 value without recourse to the raw data or information from the authors, and in castigating the authors and reviewers (never mind me) for what may have been too quick an impulse on your part to resolve a discrepancy in favor of a view you favor. This is admittedly a tendency we all need to resist, but I’ve noted it in previous conclusions you’ve drawn regarding climate sensitivity and the non-linearity of the temperature/feedback response. In any case, your assertion that the 1800 m value can be no more than 0.30 W/m2 is unjustified. At best, you can claim it might be true, but not that it must be true. You should reconsider that assertion.

In fairness to Nic, it has been pointed out to me – on my blog – that Table 1 in the published version of the paper is quite different to that in the final draft. The value quoted for 2004 – 2011 (0 – 1800 m) in the published version is indeed 0.29 W/m^2.

Thanks, And Then There’s Physics. I was quite careful to repeat the information from what I stated was the version I was looking at (a draft accepted for publication). If that was changed in the published version, the discrepancy disappears. There is still a need for all of us, I believe, to avoid resolving discrepancies according to our wishes rather than waiting for definitive information. It’s also clear that Nic made a serious error in casting blame on authors and reviewers when no such blame was warranted. As far as I recall, no-one else in this exchange of comments has made erroneous claims.

Fred Moolten writes:

“At best, you can claim it might be true, but not that it must be true. You should reconsider that assertion.”

I disagree, Fred. I knew for a fact that what I said about the trends in the accepted, peer reviewed version of the paper was true. John Lyman had confirmed his mistake when I pointed out to him that the regression slopes given in his stated results didn’t agree to the data shown in his graphs.

You also say:

“It’s also clear that Nic made a serious error in casting blame on authors and reviewers when no such blame was warranted. As far as I recall, no-one else in this exchange of comments has made erroneous claims.”

I didn’t cast blame on them – I specifically excused them from blame, saying “we all make mistakes”. Are you suggesting that the authors and peer reviewers didn’t make any mistakes? But I will amend my statement “That’s what you’ve both done, and probably what Lyman, Johnson, and all the much-vaunted peer reviewers of this paper also did.” to indicate uncertainty / a greater degree of uncertainty as to whether that was the, or one of the, reasons for none of these individuals seeing what the graphical data showed. In the authors’/peer reviewers’ case at least, it’s also IMO more a question of seeing what they expected to see in the data rather than what they wanted to see. BTW, the data is quite clear in the graphs in the accepted version – you just need to zoom in on them, as I did when originally digitising the graphs to confirm what use of a ruler showed.

Fred,

FWIW, you may want to check out the acknowledgements in the published / final version (p1954): “Nicholas Lewis pointed out an error in the accepted version as well.”

Thank you, Nic, for amending your earlier statement. At this point, it’s best not to waste more time on this. Readers can make their own judgments and probably don’t care much about how the final understanding of the facts came to be agreed on as long as the agreement came about.

Fred, you say “as long as the agreement came about”. Does this mean you concede that Nic was correct all along in his use of OHC uptake as reported by Lyman and Johnson and you were mistaken in saying “your assertion that the 1800 m value can be no more than 0.30 W/m2 is unjustified”? I have not seen you acknowledge this and I think it would be useful for readers to understand exactly what the facts are that you say have now been agreed on.

As an aside, I hope you will avoid language in the future like: “Did you do that here, and also in your testimony to the UK Parliament, or are you recalculating the reported values in some way you don’t specify?” Adding this provocative wording to a legitimate question was unnecessary and you should be particularly careful when not working from the final published paper.

Nic Lewis –

The subtitle of your report suggests that you believe the IPCC – presumably including specific experts here – “hid” your favoured results. As opposed to the more mundane explanation (also easily demonstrated fact) that plenty of experts are less convinced by them than you are.

Why the routine assumption of bad faith?

If the response here had been titled “How the GWPF/Lewis & Crok hid the bad news on global warming” would you consider it a reasonable title?

JamesG: “So Foster is criticising his own method here? How very funny!”

Every decent researcher/scientist knows the importance of being self-critical, understanding the strengths and weaknesses of one’s own methods and how they compare to others. That’s a fantastically telling comment.

Paul S – as others pointed out the “natural variability” excuse is just that.

Periods of around 30 years can be significantly influenced by natural internal variability (not mentioning potential forcing discrepancies at this point).

Natural variability is supposed to average itself out (see BBD). Therefore, if it contributes to some discrepancy over, say, 30 years, it means that its averaging out is on a longer basis than 30 years.

This means one would have to expect around 60 years to find out if the models are running too hot. But then there is nothing magical about 60 years either. You could turn up in 2044 and say “Periods of around 60 years can be significantly influenced by natural internal variability (not mentioning potential forcing discrepancies at this point).”

This would move the minimum observation window to 120 years. I hope we will all be here in 2074 to read your immortal words “Periods of around 90 years can be significantly influenced by natural internal variability (not mentioning potential forcing discrepancies at this point).” – and so on and so forth.

Back to basics. Models that cannot account for multidecadal natural variability cannot provide any indication at a level more granular than several decades, and therefore are completely useless policy-wise.

One should bear in mind that the influence of natural variability would, I think, be expected to be quite different in a world with and without anthropogenic forcings. If the system were in equilibrium, then internal variability could produce variations in the surface temperature (for example) but the low heat capacity of the atmosphere would mean that the system should return to equilibrium relatively quickly (I’m assuming here that internal variability doesn’t produce a change in forcing. I also realise that the timescale would depend on how this variability has influenced the OHC.)

On the other hand, if anthropogenic forcings have moved the system out of equilibrium (as the evidence suggests they have) then natural variability can act to change the rate at which the system returns to equilibrium (the surface temperature trends at least) but that doesn’t really imply that somehow the warming is not anthropogenic.

What I’m really saying is that it’s very hard for internal variability to produce some kind of long-term warming trend because (in the absence of some kind of change in forcing) the energy associated with an increase in surface temperature should be lost on a relatively short timescale (months or a few years).

I do realise that I’m commenting on an actual climate scientist’s blog here, so am happy to be corrected by those who are likely to know more than I do 🙂

Anders – if you can’t stand corrections unless they come from climate scientists, you had better let them do the talking.

You have not addressed the point about timescales. What is “long-term” and why?

Maurizio,

I don’t think that’s a fair assessment. I get corrected by many people who aren’t climate scientists. Just not by everyone who isn’t a climate scientist 🙂

I did give you a timescale. In the absence of a change in forcing one could show that the atmosphere should lose any excess energy in a matter of months or years. It’s not that hard to estimate. Mass of atmosphere 5 x 10^18 kg. Heat capacity 1000 J/kg/K. Change the temperature by some amount and the energy goes up. Determine the increase in outgoing flux. etc.
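The estimate sketched in that comment can be finished in a few lines: divide the atmosphere's heat capacity per square metre by a radiative restoring strength to get an e-folding time. This sketch uses the mass and heat capacity quoted above, an assumed Earth surface area of 5.1 × 10^14 m^2, and a hypothetical range of restoring strengths (1.3 to 3.3 W/m^2/K, values that appear earlier in the thread). It treats the atmosphere in isolation; coupling to the ocean mixed layer, which has a far larger heat capacity, would lengthen the timescale toward the “years” end of the range.

```python
ATMOS_MASS = 5e18        # kg, as quoted above
CP_AIR = 1000.0          # J/kg/K, as quoted above
EARTH_AREA = 5.1e14      # m^2 (assumed)
SECONDS_PER_DAY = 86400.0

def relaxation_time_days(restoring_strength):
    """e-folding time (days) of an atmosphere-only temperature anomaly: tau = C / lambda."""
    heat_capacity_per_m2 = ATMOS_MASS * CP_AIR / EARTH_AREA   # about 9.8e6 J m^-2 K^-1
    return heat_capacity_per_m2 / restoring_strength / SECONDS_PER_DAY

for lam in (1.3, 2.0, 3.3):  # W/m^2/K, hypothetical range of restoring strengths
    print(f"lambda = {lam}: tau ~ {relaxation_time_days(lam):.0f} days")
```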

That’s not really the point though. If anthropogenic forcings have resulted in an energy imbalance, then internal variability can change the rate at which the surface warms, but that it is warming is entirely anthropogenic.

Sure, but what about if we consider a natural change in forcing? Can internal variability of the Earth change the location of the climate attractor (i.e., “internal variability” not including changes in solar, orbital, or volcanic forcing, but just oceans/atmosphere/biosphere/ice/etc.)?

This is an area of curiosity for me, and I admit to knowing almost nothing about what the literature says.

The atmosphere is too transient to sustain anything which could be considered a forcing in those terms. The other things you mention are possible, but they would tend to become significant on centennial to millennial scales. Climate scientists I’ve listened to have, on a few occasions, proposed a sweet spot for climate prediction of about 40 years. Less than that and short-term weather variability can be significant; much longer than that and you start to run into another order of difficult-to-predict system dynamics, such as the ones you list.

Shaun Lovejoy has some interesting ideas in that area: http://www.earth-syst-dynam.net/4/439/2013/esd-4-439-2013.html

Windchaser,

I’m well outside my comfort zone now, but I believe there are Dansgaard–Oeschger events which occurred during the last glacial cycle. One explanation for these is that they are a consequence of unforced variability. I don’t understand the process very well, but it’s associated – I think – with some sort of ice sheet instability. So, I guess there is some evidence for internal variability producing a change in forcing but, as PaulS says, if it does exist, it’s typically associated with centennial/millennial timescales.

I’ve heard for years that the entire twentieth century warming could be natural variability but suddenly natural variability potentially causing a difference in warming trend on the order of a few hundredths of a degree per decade over thirty years is nothing more than ‘an excuse’.

The simple point is this: Take a look at my plot of historical observational and modelled 30-year trends. The observations spend very little time near the middle of the ensemble envelope – why would we expect them to do so for the most recent 30-year period?
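Comparing observed and modelled 30-year trends, as in the plot referred to above, means computing an ordinary least-squares slope for every 30-year window of an annual series. A minimal, dependency-free sketch; the function names and the synthetic series are illustrative, not the code behind that plot.

```python
import math

def ols_slope(x, y):
    """Ordinary least-squares slope of y against x."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    return sxy / sxx

def rolling_trends(years, temps, window=30):
    """(end year, trend in K/decade) for every `window`-year period of an annual series."""
    out = []
    for start in range(len(years) - window + 1):
        xs = years[start:start + window]
        ys = temps[start:start + window]
        out.append((xs[-1], 10.0 * ols_slope(xs, ys)))
    return out

# Synthetic example: a steady 0.1 K/decade warming plus a 60-year oscillation
years = list(range(1900, 2014))
temps = [0.01 * (y - 1900) + 0.1 * math.sin(2 * math.pi * (y - 1900) / 60) for y in years]
trends = rolling_trends(years, temps)
print(min(t for _, t in trends), max(t for _, t in trends))
```

Even with a constant underlying warming rate, the oscillation alone pushes individual 30-year trends above and below 0.1 K/decade.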

“Particularly relevant for their analysis is the lack of global coverage in the observed HadCRUT4 surface temperature data record. ”

Could you elaborate? How do you think the lack of global coverage impacts the results?

You could always use a more complete surface record. If you did, how would you expect it to change the results?

Ed and all – is there ANY surface record one could use that would be actual observational data?

Isn’t it simply that the models are global while the surface temperature datasets don’t have complete coverage? If the warming isn’t the same everywhere (as we expect given polar amplification) then comparing models that have global coverage with temperature datasets that don’t can produce mismatches that are a consequence of the different coverage, rather than a consequence of some fundamental difference between the models and the observations.

I don’t think the paleo records show much (or any) amplification in the Antarctic interior. The north polar region corresponds to perhaps 2.5% of the surface area of the Earth.

I agree there is a bias with missing this region (if it really shows an increased trend), but the best I can get is about a 15% effect on long-term trend, using realistic upper limits on polar amplification in the Arctic Sea.
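The area and bias arithmetic here can be sketched as follows. The fraction of the globe poleward of a latitude φ is (1 − sin φ)/2, so 2.5% corresponds to roughly 72°N. If a missing region of fractional area f warms A times as fast as the covered area, the covered-area trend understates the global trend by 1 − 1/(1 + f(A − 1)). The amplification factors below are hypothetical; the comment does not state the upper limits it assumed.

```python
import math

def cap_area_fraction(lat_deg):
    """Fraction of Earth's surface poleward of latitude lat_deg in one hemisphere."""
    return (1.0 - math.sin(math.radians(lat_deg))) / 2.0

def coverage_shortfall(missing_fraction, amplification):
    """Fractional underestimate of the global trend when a region of area
    `missing_fraction`, warming `amplification` times faster, is left out."""
    true_global = 1.0 + missing_fraction * (amplification - 1.0)  # covered-area trend = 1
    return 1.0 - 1.0 / true_global

f = cap_area_fraction(72.0)       # about 0.024
for amp in (2.0, 3.0, 4.0):       # hypothetical Arctic amplification factors
    print(amp, round(coverage_shortfall(f, amp), 3))
```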

That’s enough to explain the discrepancy between HadCRUT and GISTEMP, but not nearly enough to explain the discrepancy between warming trend of measurements and models.

Steven, good question. The reason why you really need truly global estimates of surface T, heat uptake and forcing is that these energy budget analyses rely on energy being conserved, and if the analysis isn’t global, energy could be leaking out the sides, i.e. the forcing could be heating the Arctic or Africa. You might expect as the poles are missing in HadCRUT4 that a true global average trend from a global version of HadCRUT4 would show larger trends (e.g. Cowtan and Way, 2014). But Nic Lewis is right about other global datasets not showing greater trends than the HadCRUT4 data, so I think the jury is still out here.
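The energy-budget estimates under discussion (as in Otto et al., 2013, building on Gregory et al., 2002) take global-mean changes in surface temperature ΔT, forcing ΔF and top-of-atmosphere imbalance ΔN between a base and a final period, and compute TCR = F_2x ΔT/ΔF and ECS = F_2x ΔT/(ΔF − ΔN). A minimal sketch; the inputs are hypothetical, chosen only to show that both estimates scale linearly with ΔT, so a temperature record that misses fast-warming regions lowers them in proportion.

```python
F_2X = 3.71  # W/m^2 per CO2 doubling (AR5 value, assumed here)

def energy_budget_estimates(d_temp, d_forcing, d_imbalance, f_2x=F_2X):
    """Energy-budget TCR and ECS (K) from global-mean changes between two periods.

    d_temp      -- change in global-mean surface temperature, K
    d_forcing   -- change in radiative forcing, W/m^2
    d_imbalance -- change in top-of-atmosphere imbalance (heat uptake), W/m^2
    """
    tcr = f_2x * d_temp / d_forcing
    ecs = f_2x * d_temp / (d_forcing - d_imbalance)
    return tcr, ecs

# Hypothetical inputs, for illustration only
tcr, ecs = energy_budget_estimates(d_temp=0.75, d_forcing=1.95, d_imbalance=0.65)
# The same case with a temperature change 10% lower, e.g. from incomplete coverage
tcr_low, ecs_low = energy_budget_estimates(d_temp=0.675, d_forcing=1.95, d_imbalance=0.65)
print(f"TCR {tcr:.2f} K, ECS {ecs:.2f} K; with 10% less warming: {tcr_low:.2f} K, {ecs_low:.2f} K")
```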

Hello Dr. Forster,

“You might expect as the poles are missing in HadCRUT4 that a true global average trend from a global version of HadCRUT4 would show larger trends (e.g. Cowtan and Way, 2014). But Nic Lewis is right about other global datasets not showing greater trends than the HadCRUT4 data, so I think the jury is still out here”

On our project website, we have provided a range of reconstructions based on different input datasets, and what has become clear is that the discrepancies between our trends and some of the other datasets (for example NOAA and GISS) are related to issues of coverage (GHCNv3 vs CruTempv4) and different SST sources. When we reconstruct using GHCNv3 as a base instead of CruTempv4, we get slightly lower global temperature trends because it has much less high-latitude coverage over the past decade.

We are currently working on trying to reconcile the differences between our dataset and GISS with at least some of the differences explained by the reasons above. As for NOAA they infill at low to mid latitudes but have less high latitude coverage therefore coverage bias is an issue for them as well. We did not use NOAA as a base (instead used HadCRUTv4) in the paper because NOAA does not include the most recent SST correction and because they infill in the areas mentioned above.

See this document:

http://www-users.york.ac.uk/~kdc3/papers/coverage2013/update.140205.pdf

Figure U1 and Table U2

Global temperatures have supposedly gone up by 0.8C since atmospheric CO2 was 280ppm. CO2 is now around 400ppm or an increase of around 43%. The effect of CO2 is supposedly logarithmic so a climate sensitivity around 1.75C for doubling of CO2 is not unrealistic (in fact possibly too high). Anything above has no foundation in reality. Where is the temperature accelerator after 17 years of “pause”? Just the opinion of a layman who has studied all the “learned” contributions above. In particular I find this bizarre:

Ed Hawkins says:

March 6, 2014 at 11:09 am

“….I agree. This is potentially a problem with using the observational period to derive ECS.”

I am sorry, but those who think that models better reflect reality than observations ought to go back to school. How can you create any model without first having studied the real world? This is altogether too “lofty”.

John Peter, what you’re doing is looking at the Transient Climate Response (TCR), not the equilibrium climate sensitivity (ECS). The TCR tells us about the short-term warming effects of CO2, and your guess of ~1.75 C/doubling corresponds not-too-badly to an ECS of 3C, which is the mean IPCC estimate.

About the difference between TCR and ECS: because each bit of CO2 we add just helps the Earth retain some extra heat each year, most of the effect of CO2 takes decades to manifest – if I recall correctly, it’ll take 30-60 years to see *half* the effect of the CO2 that we’re emitting now. The point is, there’s a lot of warming left in the pipeline for that 400 ppm, particularly since most of the increase in CO2 came in the last few decades.
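The back-of-envelope estimate in John Peter's comment can be made explicit. This sketch uses the widely used simplified CO2 forcing expression 5.35 ln(C/C0) W/m^2 (Myhre et al., 1998) and attributes all of the quoted 0.8 °C to CO2 alone. With those numbers the per-doubling figure comes out near 1.55 °C; because it ignores other forcings and the warming still in the pipeline, it is a transient, CO2-only number, which is why the reply compares it with TCR rather than ECS.

```python
import math

def co2_forcing(c_ppm, c0_ppm=280.0):
    """Simplified CO2 radiative forcing, 5.35 * ln(C/C0) W/m^2 (Myhre et al., 1998)."""
    return 5.35 * math.log(c_ppm / c0_ppm)

def warming_per_doubling(delta_t, c_ppm, c0_ppm=280.0):
    """Observed warming divided by the number of CO2 doublings, attributing all of it to CO2."""
    return delta_t / math.log2(c_ppm / c0_ppm)

print(round(co2_forcing(400.0), 2))                # about 1.91 W/m^2 for 280 -> 400 ppm
print(round(warming_per_doubling(0.8, 400.0), 2))  # about 1.55 K per doubling
```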

Nic Lewis’s work, while biased towards a low ECS for a doubling of CO2, still shows that net feedbacks are positive, so yes, nothing here suggests that this bit of “settled science” has become unsettled …

You guys have a weird definition of “business-as-usual.” The RCP8.5 is not a BAU case. BAU does not imply that the current trend goes on forever, but rather that it evolves in a relatively natural way. For example, the widespread (beyond the U.S.) adoption of natural gas fracking technologies in the future would logically be part of a BAU scenario. Compare the RCP8.5 global CO2 emissions out to the year 2040 with the reference case from the U.S. Energy Information Administration (the EIA refers to its reference case as “business-as-usual”). In 2040 (as far out as the EIA projects), the RCP8.5 fossil fuel CO2 emissions are 16.8 PgC/yr, while in the EIA BAU scenario they are 12.4 PgC/yr.

Now that the climate sensitivity seems lower, the preferred emissions scenario becomes higher?

Global fossil fuel emissions are currently tracking above those in RCP8.5, so business-as-usual seems rather appropriate.

https://twitter.com/KHayhoe/status/438736424662081536

Ed.

Ed,

That’s pretty short-term thinking. http://www.climatechange2013.org/images/figures/WGI_AR5_FigTS-19.jpg

BAU is not a static technology scenario, but rather a dynamic one (largely free from government interference).

-Chip

There is a robust way out of this. A paper by Lewis, Forster, Hawkins and Mosher. Agree on what are the public observations and compare them in a scientific way to models output.

It’d be the sensitivity paper to end all sensitivity papers.

Maybe add James Annan to the list of authors?

I must say, for your average fairly intelligent, scientifically-minded layperson, this is a somewhat conflicting and confusing addition to the debate centred around AGW. The whole issue of climate sensitivity to CO2 is dogged by conflicting accounts based on assumptions backed up by only tentative observations. In essence, it determines whether AGW turns into CAGW in the future.

People here delving into the minutiae of this subject do little to help communicate the basics: whether man-made global warming is real; if so, whether it is significant; and if significant, how significant. One talks about climate sensitivity to CO2, but really CO2 is only a small part of the story: the bulk of ECS comes from the (unproven) assumption that positive water vapour feedbacks amplify the warming.

[snip – we are not going to discuss here whether CO2 increases come from fossil fuel burning – it does.]

So we are left with a bunch of climate scientists and sceptics arguing over a hypothetical climate sensitivity based upon a theoretical assumption of CO2/water vapour feedbacks, based itself on an a priori assumption that increased atmospheric CO2 is due in total to an imbalance created by the very small contribution from fossil fuel emissions accumulating, somehow giving us the observed 43% increase. Sounds unlikely to me. Perhaps more likely is a general increase in CO2 (fossil and natural) created by deforestation removing natural carbon sinks, remembering that CO2 is, in essence, plant food. This of course is a totally different issue, requiring a totally different solution.

So debate ECS and TCR all you like – in essence, all they are is a crystal ball through which is reflected a hypothetical possible future climate. They say very little about the relative impact of natural climate variation, internally and externally forced (particularly solar). They only give us a largely theoretically generated estimate of how increasing CO2 affects global surface temperatures. Meanwhile, the real world continues to generate real climate/weather events, like shifting jet streams and 17 and a half years of no statistically significant rise in surface temperatures.

I quote Piers, Jonathan and Ed:

“Climate sensitivity remains an uncertain quantity. Nevertheless, employing the best estimates suggested by Lewis & Crok, further and significant warming is still expected out to 2100, to around 3°C above pre-industrial climate, if we continue along a business-as-usual emissions scenario (RCP 8.5), with continued warming thereafter.”

That would be the canonical theory from the IPCC, which totally ignores observations of nature. There is no doubt that there is no greenhouse warming now and that there has been none for the last seventeen years, two thirds of the time the IPCC has existed. There is something wrong with an allegedly scientific organization that denies this observed fact. The only responses I have seen are laughable attempts to find that missing heat at the bottom of the ocean or in other contortions of reality. And not one of them has attempted to apply the laws of physics to the absorption of IR by carbon dioxide.

It so happens that in order to start greenhouse warming by carbon dioxide you must simultaneously increase the amount of carbon dioxide in the atmosphere. That is necessary because the absorbance of that gas for infrared radiation is a property of its molecules and cannot be changed. Since there has been no warming at all in the twenty-first century, we have to look at the twentieth-century warmings and see how they meet this criterion.

There are two general warming incidents in that century, plus a separate one for the Arctic. The first warming started in 1910, raised global temperature by half a degree Celsius and then stopped in 1940. The second one started in 1999, raised global temperature by a third of a degree Celsius in only three years, and then stopped. Arctic warming started suddenly at the turn of the twentieth century after two thousand years of slow, linear cooling. There is also a warming that starts in the late seventies and raises global temperature by a tenth of a degree Celsius per decade, which is shown in ground-based temperature curves. Satellite temperature curves indicate no warming in the interval from 1979 to early 1997, which makes that warming a fake warming. Fortunately we do know what carbon dioxide was doing when each of these warmings started, thanks to the Keeling curve and its extension by ice core data.

This information tells us that there was no increase of atmospheric carbon dioxide at the turn of the century when Arctic warming began, and there was none either in 1910 or in 1999 when the other two warming periods got started. Hence, there was no greenhouse warming whatsoever during the entire twentieth century. This makes the twentieth century entirely greenhouse free. The twenty-first century is also greenhouse free, thanks to that hiatus-pause-whatchamacallit thing. And this takes care of your statement that “Climate sensitivity remains an uncertain quantity.” It is not uncertain any more but has a value of exactly zero.

The numbers in that quote from the blog post come directly from Lewis & Crok – so they would strongly disagree with you.

Ed.

“There is no doubt that there is no greenhouse warming now and there has been none for the last seventeen years, two thirds of the time that IPCC has existed.”

The existence of “greenhouse warming” is not in doubt. That is the best-understood part of climate science. The uncertainty is due to other effects that may add to the greenhouse forcing to produce the variation in temperature changes that we are observing, some of them natural, some anthropogenic. There are a lot of possibilities.

The upward progress of CO2 in the atmosphere is undisputed and so is the upward progress in temperature in the 20th century.

Ed & Piers –

Hi, thanks for the post. I have a few questions.

In Figure 1, isn’t that just showing that TCR < ECS in the models?

Regarding Figures 2&3, starting with Fig 2 TCR panel, if you draw a line straight up from 1 unit forcing you encounter a model temperature change spread of 0.3 to 0.6 C, to my eyes. You note that there is a visible offset such that the model spread is somewhat high compared to the observation-derived ellipses, but the surface mask differs between the two. So in Fig 3, the comparison is rectified by subsampling the models on the HADCRUT4 mask.

I have 4 questions about that comparison:

1. In the subsampled comparison in Fig 3 there now appear to be about 5 models giving a 0.1 to 0.6 K temperature change from 0 units of forcing (look at the vertical axis) in both the TCR and ECS figures. Is this an error?

2. In the equi-sampled comparison the model mean is pulled down, improving the model-obs fit. One interpretation is that, if HADCRUT4 had the same spatial coverage as the models, the TCR inferred from observations would be higher and the clusters would line up better in Fig 2. Another possibility is that the models run too hot in the unobserved regions, and if this were fixed, the clusters would also line up better in Fig 2. Not having read the Jones et al. paper, how do they distinguish these two possibilities?

3. In the HADCRUT4 subsample, drawing the line up from 1 unit forcing in the TCR panel now gives a much wider range of temperature changes, from 0.1 C up to a faint little yellow dot at 0.9 C (let me guess, the UVic model?). Some models thus run even hotter in the subsampled region than globally. In other words, it's not just that the average TCR is lower in the subsample; the overall uncertainty in model behaviour is higher, as the entire fan of dots spreads out a lot more. It appears that model behaviour in the unobserved regions offsets the models' representation of TCR everywhere else. I don't know what fraction of the surface is missing in the HADCRUT4 mask you use, but isn't it strange that including it (going from Fig 3 to Fig 2) produces quite a noticeable compression of the distribution of TCR estimates?

Suppose, for instance, that the difference between the figures was that, for the models, Fig 3 included only the SH and Fig 2 included the whole world. So we'd look at Fig 3 and say the models are all over the map on TCR in the SH. But then Fig 2 says they line up much more tightly once we add in the NH. So within a typical model, the NH offsets what happens in the SH (in this example). Models that are relatively warm in the SH are relatively cool in the NH and vice versa, and by averaging globally they all cluster together. That would suggest to me that there's something systematically important about the regions left out of the subsample, and that problems in how the models represent the unobserved regions are offset by biases in the observed regions. Or is there another explanation of why the distribution is so much narrower in Fig 2 than in Fig 3?

4. Presumably the distribution of unobserved regions is not random. Would I be right in assuming that the omitted regions are mostly in the Arctic?
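Ross's coverage questions turn on how an area-weighted mean changes when part of the globe is masked out. This toy sketch assumes a purely zonal warming pattern with extra warming poleward of 60°N. It pretends that everything poleward of 70° in either hemisphere is unobserved. Both the pattern and the mask are hypothetical, and the real HadCRUT4 gaps are irregular. Weighting each latitude band by cos(latitude) gives the coverage fraction, which works out to sin 70°, about 0.94. It also shows how a masked mean can sit below the global mean when the unobserved regions warm more.

```python
import math

# Hypothetical 5-degree latitude bands; band centres run from -87.5 to 87.5.
lats = [-87.5 + 5.0 * i for i in range(36)]
# The area of each band is proportional to cos(latitude).
weights = [math.cos(math.radians(lat)) for lat in lats]

def warming(lat):
    """Toy zonal-mean warming in K: 1 K everywhere, 2 K poleward of 60N (assumed)."""
    return 2.0 if lat > 60.0 else 1.0

def observed(lat):
    """Toy coverage mask: bands poleward of 70 degrees are treated as unobserved."""
    return abs(lat) < 70.0

def weighted_mean(values, wts):
    return sum(v * w for v, w in zip(values, wts)) / sum(wts)

field = [warming(lat) for lat in lats]
global_mean = weighted_mean(field, weights)

obs_idx = [i for i, lat in enumerate(lats) if observed(lat)]
masked_mean = weighted_mean([field[i] for i in obs_idx], [weights[i] for i in obs_idx])
coverage = sum(weights[i] for i in obs_idx) / sum(weights)

print(f"coverage fraction:   {coverage:.3f}")
print(f"global mean warming: {global_mean:.3f} K")
print(f"masked mean warming: {masked_mean:.3f} K")
```

Changing the warming pattern shows the reverse case too. If the unobserved bands warmed less than the rest, the masked mean would exceed the global one.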

Hi Ross,

Will leave Piers to answer the questions on Figs. 2 & 3.

But Fig. 1 shows more than TCR < ECS. Because TCR increases in warmer climates, owing to less efficient ocean heat uptake, a TCR derived from a 1% run starting from pre-industrial climate underestimates the warming that the same model actually simulates.
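The TCR referred to here comes from the idealised run in which CO2 rises by 1% per year. This short sketch covers the bookkeeping. Compounding at 1%/yr doubles CO2 after about 70 years. TCR is commonly taken as the 20-year mean around that point, years 61-80. Under the widely used simplified expression ΔF = 5.35 ln(C/C₀) W m⁻², the forcing in such a run grows linearly in time. The 5.35 coefficient is that standard approximation, not a value taken from the models in Fig. 1.

```python
import math

GROWTH = 0.01  # 1% per year compound CO2 increase, as in the idealised run

# Year at which CO2 reaches twice its starting concentration.
doubling_year = math.log(2.0) / math.log(1.0 + GROWTH)

# 20-year averaging window centred on the doubling time (commonly years 61-80).
centre = round(doubling_year)
window = (centre - 9, centre + 10)

def co2_ratio(year):
    """C/C0 after a given number of years of 1%/yr growth."""
    return (1.0 + GROWTH) ** year

def forcing(year, coeff=5.35):
    """Simplified CO2 forcing 5.35 ln(C/C0) in W m^-2; linear in year here."""
    return coeff * math.log(co2_ratio(year))

print(f"CO2 doubles after {doubling_year:.2f} years; TCR window: years {window[0]}-{window[1]}")
print(f"forcing at doubling: {forcing(doubling_year):.2f} W m^-2")
```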

And, there is missing data in HadCRUT4 in the Antarctic, South America and Africa too.

cheers,

Ed.

Ed: “Because TCR increases in warmer climates due to less efficient ocean heat uptake…”

I assume the higher TCR in warmer climates is derived from the models. Is there observational evidence backing that up?

My understanding is that the evidence comes from models, but is backed up by a physical understanding.

Ed.

It’s from models – but theoretically there are two competing effects. A greater forcing rate leads to a greater vertical temperature gradient in the ocean, which is more effective at moving heat downwards.
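Gregory and Forster (2008), cited in the post, approximate transient warming under steadily increasing forcing as ΔT = F/ρ. Here the climate resistance is ρ = α + κ, where κ is an ocean heat uptake efficiency. At equilibrium κ plays no role, and ΔT = F/α. This sketch uses hypothetical α and κ values and an assumed round 3.7 W m⁻² for doubled-CO2 forcing. It shows the mechanism discussed in this exchange: a smaller κ, meaning less efficient heat uptake, raises TCR toward ECS.

```python
F2X = 3.7  # W m^-2, assumed round value for the forcing from doubled CO2

def ecs(alpha, f2x=F2X):
    """Equilibrium warming: forcing balanced entirely by the radiative response."""
    return f2x / alpha

def tcr(alpha, kappa, f2x=F2X):
    """Transient warming with ocean heat uptake N = kappa*T, i.e. F = (alpha + kappa)*T."""
    return f2x / (alpha + kappa)

alpha = 1.2  # hypothetical feedback parameter, W m^-2 K^-1
for kappa in (0.9, 0.7, 0.5):  # hypothetical heat uptake efficiencies, W m^-2 K^-1
    ratio = tcr(alpha, kappa) / ecs(alpha)
    print(f"kappa = {kappa}: TCR = {tcr(alpha, kappa):.2f} K, "
          f"ECS = {ecs(alpha):.2f} K, TCR/ECS = {ratio:.2f}")
```

In this form TCR/ECS = α/(α + κ), so TCR stays below ECS for any positive κ.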

TCR by definition is < ECS and ECS depends only on CO2 levels. ECS remains constant unless feedbacks are non-linear. We know that feedbacks have not caused the oceans to boil away over the last 4 billion years so H2O/cloud feedbacks eventually become negative.

Fig questions:

1. points on axes are missing data – ignore these

2. You are right, I think – it could be either or a combination of both. This isn’t in Jones et al. Jones et al. just provides the masked data (and is a nice paper). I’ve only just made the plot and haven’t done any analysis. The point, though, is that lack of coverage might make a difference.

3 – agree and haven’t looked at this at all – worth a delve!

I have a question for Piers Forster, which I’d like to follow with a more general comment about the definition of various climate responses we call “sensitivity”. Piers, in your 2013 JGR paper evaluating estimates of TCR and ECS (“equilibrium climate sensitivity” but see below), you assume the feedback parameter α to be time invariant. However, multiple studies have concluded that α is likely to decline with time as a result of slowly evolving changes in feedbacks and in the geographic pattern of climate response. While linearity may be a reasonable assumption for estimates based on response to an instantaneous forcing applied 150 years earlier (Andrews et al 2012), it may be less useful for estimates derived from recent forcing increases. Can you give a rough estimate as to how much your ECS value of 3.22 C in Table 1 might rise if the values in that Table had been based on real world forcing data from, say, the most recent decade or even decades of significant increase in forcings? My question is not designed to seek a precise answer, but rather a judgment about the legitimacy of assuming time invariance for the parameter relating radiative restoring to temperature change. My more general comment follows.
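A toy version of the regression of top-of-atmosphere imbalance N against warming T illustrates the question about time invariance. That regression is the one used for abrupt-forcing runs such as those in Andrews et al. (2012). The synthetic data below are invented. α is assumed to weaken from 1.5 to 0.9 W m⁻² K⁻¹ once warming passes 2 K, and the 4xCO2 forcing is a hypothetical 7 W m⁻². Halving the 4xCO2 equilibrium to get a 2xCO2 value assumes logarithmic forcing. Fitting only the small-warming points recovers α = 1.5. Fitting the whole range gives a smaller α and hence a larger inferred ECS.

```python
def linear_fit(xs, ys):
    """Ordinary least-squares fit y = a + b*x; returns (a, b)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    b = sxy / sxx
    return my - b * mx, b

F4X = 7.0  # hypothetical effective forcing of an abrupt 4xCO2 step, W m^-2

def toy_imbalance(temp):
    """Synthetic TOA imbalance N (W m^-2) at warming T (K). Assumed for
    illustration: alpha = 1.5 up to 2 K of warming, 0.9 beyond that."""
    if temp <= 2.0:
        return F4X - 1.5 * temp
    return F4X - 1.5 * 2.0 - 0.9 * (temp - 2.0)

def gregory_estimate(temps, imbalances):
    """Regress N on T: intercept = forcing, -slope = alpha.
    Returns (alpha, 2xCO2 ECS), halving the 4xCO2 equilibrium F/alpha."""
    forcing, slope = linear_fit(temps, imbalances)
    alpha = -slope
    return alpha, forcing / alpha / 2.0

temps = [0.25 * k for k in range(1, 21)]  # synthetic warming path, 0.25 to 5.0 K
imbalances = [toy_imbalance(t) for t in temps]

early = [(t, n) for t, n in zip(temps, imbalances) if t <= 2.0]
alpha_early, ecs_early = gregory_estimate([t for t, _ in early], [n for _, n in early])
alpha_all, ecs_all = gregory_estimate(temps, imbalances)

print(f"small-warming points: alpha = {alpha_early:.2f}, ECS = {ecs_early:.2f} K")
print(f"all points:           alpha = {alpha_all:.2f}, ECS = {ecs_all:.2f} K")
```

The size of the difference depends entirely on the assumed change in α. The sketch shows only the direction of the effect when α is treated as constant.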

It strikes me that climate “sensitivities” encompass a larger multitude of phenomena than is sometimes acknowledged. All are “observationally based”, and all are also model based (one can’t “observe” a forcing). I’d like to suggest at least four, ranging from the shortest-term, lowest-value responses to the responses with the longest duration and highest sensitivity values. 1) TCR, based on temperature change at the time of CO2 doubling. 2) “Effective climate sensitivity” (CS-eff), also estimated under non-equilibrium conditions, but designed to apply the value of α derived under those conditions to an equilibrium state, where CS-eff = F(2xCO2)/α. 3) The usual notion of ECS, based mainly on analysis of positive and negative feedbacks. I think one could add “paleosensitivity” as a subset of this category. 4) “Earth system sensitivity”, which estimates temperature change if 2xCO2 forcing is followed to a true equilibrium that includes the effects of long-term (millennial) feedbacks from ice sheets, vegetation, and the carbon cycle. A typical value is 6 C. Unlike ECS, this is probably the closest of the four to a true “equilibrium” sensitivity, but it is the least relevant to the near future.
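The standard energy-budget expressions make the CS-eff and TCR categories concrete. In them, α is diagnosed from changes in temperature (ΔT), forcing (ΔF) and top-of-atmosphere imbalance (ΔN) between two periods. The inputs below are placeholders invented for illustration, not taken from Lewis & Crok or any other study. F(2xCO2) = 3.7 W m⁻² is an assumed round value. If α later declines as the climate approaches equilibrium, the true ECS would exceed the CS-eff computed this way.

```python
F2X = 3.7  # W m^-2, assumed round value for the forcing from doubled CO2

def energy_budget(d_temp, d_forcing, d_imbalance, f2x=F2X):
    """Energy-budget estimates from changes between a base and a recent period.

    alpha  = (dF - dN) / dT   effective feedback parameter (W m^-2 K^-1)
    cs_eff = F2x / alpha      effective climate sensitivity (K)
    tcr    = F2x * dT / dF    transient response, assuming warming scales with forcing (K)
    """
    alpha = (d_forcing - d_imbalance) / d_temp
    return alpha, f2x / alpha, f2x * d_temp / d_forcing

# Placeholder changes, invented for illustration; not taken from any study.
alpha, cs_eff, tcr = energy_budget(d_temp=0.75, d_forcing=1.95, d_imbalance=0.65)
print(f"alpha = {alpha:.2f} W m^-2 K^-1, CS-eff = {cs_eff:.2f} K, TCR = {tcr:.2f} K")
```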

While categories 1 and 3 (TCR and ECS) are routinely distinguished, 2 and 4 receive less attention. My particular concern is the possibility that CS-eff and ECS may inappropriately be conflated although they describe different phenomena. If that is the case, low values for CS-eff and higher values for ECS may both be accurate climate descriptors even if they disagree substantially.