The time at which the signal of climate change emerges from the ‘noise’ of natural climate variability (Time of Emergence, ToE) is a key variable for climate predictions and risk assessments.

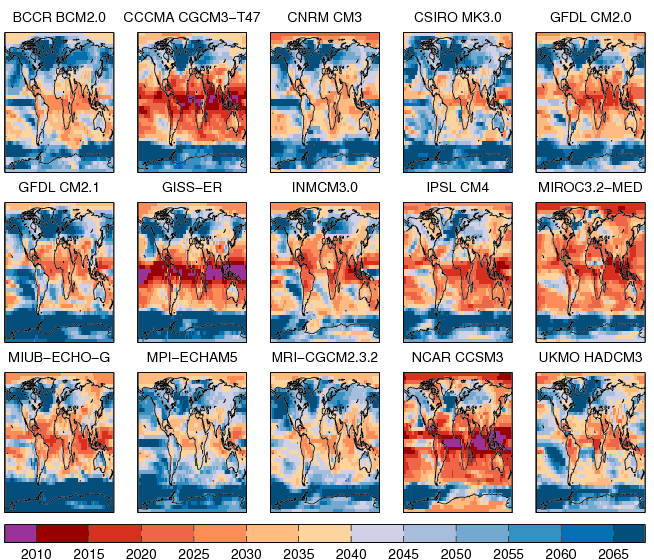

The figure shows the ToE for a range of GCMs. ToE occurs several decades sooner in low latitudes, particularly in boreal summer, than in mid-latitudes, because the variability tends to be far smaller in the tropics (also see Mahlstein et al.). However, there is considerable uncertainty in when ToE occurs.

Importantly, the uncertainty in ToE arises not only from inter-model differences in the magnitude of the climate change signal, but also from large differences in the simulation of natural climate variability. Narrowing the uncertainty in ToE will require better understanding of the causes of climate variability, especially as the GCMs analysed tend to have too large variability in the extra-tropical regions.
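To illustrate how the noise level alone can shift ToE, here is a minimal sketch. It is not the method used in the paper: it assumes ToE is simply the first year in which the signal exceeds a chosen multiple of the noise standard deviation. The function name `time_of_emergence`, the threshold of 2, the linear warming signal and the noise values are all hypothetical choices for illustration.

```python
import numpy as np

def time_of_emergence(years, signal, noise_std, threshold=2.0):
    """Return the first year in which |signal| / noise_std exceeds threshold.

    Simplified, assumed definition for illustration only.
    Returns None if the threshold is never exceeded.
    """
    ratio = np.abs(np.asarray(signal, dtype=float)) / noise_std
    above = ratio > threshold
    if not above.any():
        return None
    # np.argmax on a boolean array gives the index of the first True
    return int(years[np.argmax(above)])

years = np.arange(2000, 2101)

# Hypothetical signal: the same 0.03 degC/year warming in both regions
signal = 0.03 * (years - years[0])

# Hypothetical interannual noise: smaller in the tropics than in mid-latitudes
toe_tropics = time_of_emergence(years, signal, noise_std=0.31)
toe_midlat = time_of_emergence(years, signal, noise_std=0.91)

print("Tropics ToE:", toe_tropics)
print("Mid-latitude ToE:", toe_midlat)
```

With an identical signal, the region with smaller variability emerges decades earlier. By the same logic, a model that overestimates variability would delay its ToE.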

The full analysis discusses this all in more detail.

Hawkins, E., & Sutton, R. (2012). Time of emergence of climate signals. Geophysical Research Letters, 39(1). DOI: 10.1029/2011GL050087

Ed,

There is also the ToE of the ameliorating effect of mitigation, i.e. the period of latency during which we do not know if the intended effect is occurring. At first view this seems doubly difficult as we are uncertain not only of the mitigation signal but of the underlying warming effect that is being mitigated.

Given that we believe that we can, by our actions, both warm the planet and mitigate against that warming, and finally arrive at some preferred outcome, we could think of that whole process as an exercise in climate navigation.

Let us say that the preferred outcome is to achieve the no more than 2ºC above pre-industrial goal. Let us say that we have a plan of action designed in our best judgement to meet that goal. It would seem obvious that we would welcome some inkling as to whether that plan was proving to be ineffective, insufficient, just right or perhaps unnecessarily punishing.

Viewed as an exercise in navigation: given that we could, if we wished, pull on the helm, i.e. vary the controlling factors, be they emissions, land use, etc., and thereby alter the underlying trajectory, how long would it be before we could ascertain that this new, preferable trajectory was being achieved?

In my judgement, the prospect of maintaining a significant mitigation strategy through an extended period whilst having little inkling as to its worth is deeply problematic.

In my understanding, the way that we are currently using the big GCM simulators is not well suited to the problem of providing navigational aids. The models do not commonly run with assimilated data for the oceans and the current warming pattern, and hence are not in agreement with the climate state prior to projection forward. Even if that were the case, that real-world climate state would not necessarily be correctly positioned with respect to a modelled climate attractor, e.g. a model would initially embark on a relaxing transient. Whereas the GCMs might be able to closely simulate historic climate in a generic sense, I do not think they simulate in the same sense that a flight simulator does.

Put as a challenge: suppose we are given a simulator with sight of only its past performance, no inkling of its sensitivity or any other data that could only be gleaned by running it forward, and no ability to perform multiple runs, just like the real-world challenge we face. Knowing the goal, and having control of anthropogenic factors going forward, I have doubts as to how well we are likely to perform with respect to that goal, taking into account known practicalities and any downsides to mitigation.

If we attempt mitigation, I can think of no greater climate science requirement than the need for some timely method of discriminating the underlying trajectory.

For me, there is very little interest in continuing to simulate regions of future climate which we have little interest in exploring for real. I would much rather know as much as possible about the climate terrain between here and the 2ºC goal. I am more interested in knowing what the ongoing underlying trajectory is, than precisely why it is what it is. In large part, this seems to be a signal in noise discrimination problem.

It would be lamentable if we were well prepared to advise on the need for mitigation but unprepared to advise on how to manage mitigation going forward through treacherous social, economic, and political waters.

My question is, given that we intend to mitigate, do you think that we have built the correct tools, constructed the correct techniques, necessary to best advise on the navigation of safe passage, if that is possible, between here and 2ºC, given the social, economic and political imperatives that we face?

Alex

Thanks for the comment Alex – you raise some really interesting points, and I like your navigation analogy. Were you imagining something like a regularly updated ‘best’ path of emissions to avoid 2C/3C/4C at some confidence level?

A simple answer would be that we probably don’t perform the simulations required at present. However, the questions seem to fall naturally within the ‘Detection & Attribution’ framework, and I could see some ways of extending that methodology to try and answer some of these issues. This may be particularly relevant also for detecting any climatic changes due to the projected decline in tropospheric aerosols (and potentially due to ozone recovery) in the next couple of decades.

Will think further about how one might go about simulating the processes and steps involved.

cheers,

Ed.

Ed,

I’m a postgraduate student at Xiamen University, and I’m interested in climate change. When I read your article, I was puzzled by the method used to calculate ToE.

When calculating the global surface air temperature (T_global), did you first average and then perform a fourth-order polynomial fit? Does the order of these processing steps affect your results? If it does, how should one choose between them?

Holy
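One way to explore the ordering question numerically is the minimal sketch below. It uses synthetic data and is not the procedure used in the paper. It assumes an ordinary least-squares fourth-order polynomial fit (`np.polyfit`), a shared time axis with no missing values, and a simple unweighted mean over a hypothetical set of series. The names `series`, `coef_avg_then_fit` and `coef_fit_then_avg` are illustrative only.

```python
import numpy as np

rng = np.random.default_rng(0)
n_years, n_series = 150, 8

# Scaled time axis in [-1, 1] keeps the degree-4 fit well conditioned
t = np.linspace(-1.0, 1.0, n_years)

# Hypothetical temperature series: a shared smooth trend plus independent noise
trend = 1.5 * (t + 1.0) ** 2
series = trend + 0.2 * rng.standard_normal((n_series, n_years))

# Option A: average the series first, then fit one fourth-order polynomial
coef_avg_then_fit = np.polyfit(t, series.mean(axis=0), 4)

# Option B: fit each series separately (one column per series), then average
coef_fit_then_avg = np.polyfit(t, series.T, 4).mean(axis=1)

max_diff = np.max(np.abs(coef_avg_then_fit - coef_fit_then_avg))
print("Largest coefficient difference:", max_diff)
```

Under these assumptions the two orderings agree to rounding error, because a least-squares polynomial fit is a linear operation on the data. The equivalence would not be expected to hold once a nonlinear step is involved, such as taking a ratio or a standard deviation before averaging, or when the series have different missing years.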