A recent comparison of global temperature observations and model simulations on this blog prompted a rush of media and wider interest, notably in the Daily Mail, The Economist & in evidence to the US House of Representatives. Given the widespread misinterpretation of this comparison, often without the correct attribution or links to the original source, a more complete description & update is needed.

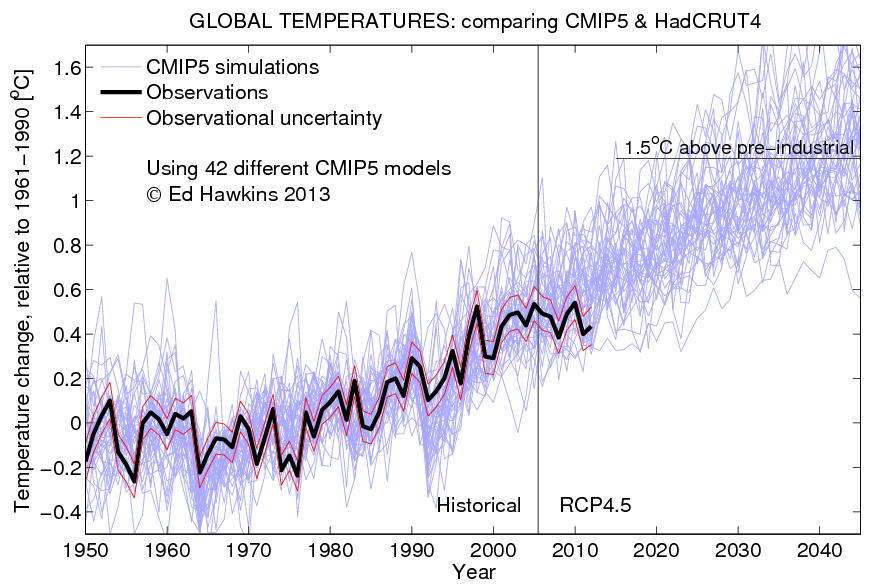

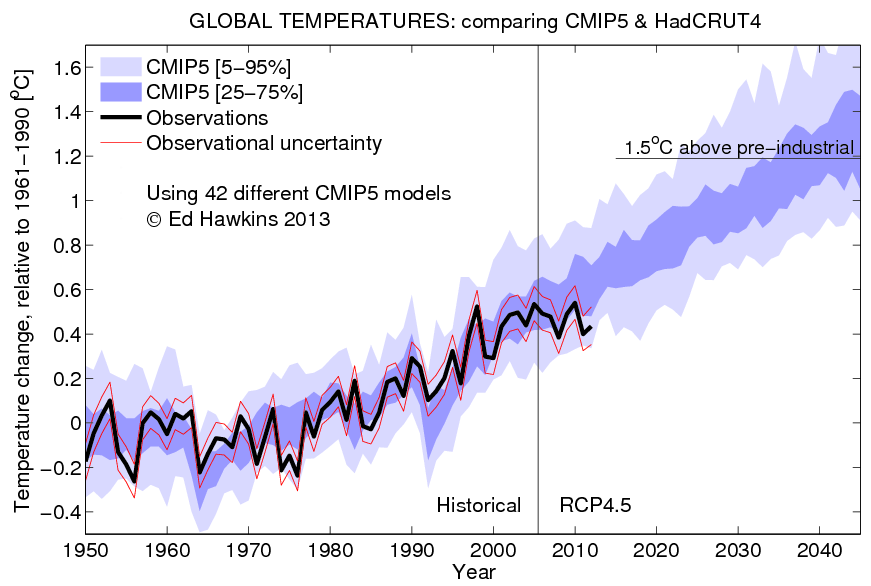

The two figures compare observed global temperature changes with the latest projections from 42 different climate models (CMIP5) for the 1950-2050 period, using a common reference period of 1961-1990. One figure shows the individual simulations, and the other shows an estimate of the 50% and 90% confidence intervals.
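As a rough illustration of how such bands can be built from an ensemble, here is a minimal sketch that takes empirical percentiles across simulations for each year. It assumes simple percentiles (the post does not say how the intervals were estimated), and the 42 synthetic series are made-up stand-ins, not CMIP5 output. The function names are hypothetical.

```python
import random

def percentile(sorted_vals, q):
    """Linear-interpolation percentile (q in 0..100) of a pre-sorted list."""
    if not sorted_vals:
        raise ValueError("empty data")
    pos = (len(sorted_vals) - 1) * q / 100.0
    lo = int(pos)
    hi = min(lo + 1, len(sorted_vals) - 1)
    frac = pos - lo
    return sorted_vals[lo] * (1 - frac) + sorted_vals[hi] * frac

def ensemble_bands(runs):
    """runs: list of equal-length series, one per simulation.
    Returns one dict per year holding the 5/25/50/75/95th percentiles."""
    bands = []
    for values in zip(*runs):            # all simulations for a single year
        s = sorted(values)
        bands.append({q: percentile(s, q) for q in (5, 25, 50, 75, 95)})
    return bands

# Synthetic stand-in for 42 simulations over 1950-2050 (NOT real CMIP5 data).
rng = random.Random(0)
years = list(range(1950, 2051))
runs = [[0.015 * (y - 1975) + rng.gauss(0, 0.15) for y in years]
        for _ in range(42)]

bands = ensemble_bands(runs)
b2013 = bands[years.index(2013)]
print(f"2013: 50% band {b2013[25]:.2f} to {b2013[75]:.2f}, "
      f"90% band {b2013[5]:.2f} to {b2013[95]:.2f}")
```

The 25th to 75th percentiles give the 50% band and the 5th to 95th give the 90% band. With only 42 members the outer percentiles are themselves quite uncertain.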

What can be learnt from this comparison? Simply, global temperatures have not warmed as much as the mean of the model projections in the past decade or so and are currently at the lower edge of the ensemble of simulations. However, there are simulations which are consistent with the observations.

Interpretation of this comparison is not straightforward for many reasons (see below).

But, what does this mean for the future? I welcome suggestions for a ‘likely’ range for the global temperature mean of the 2016-2035 period – the IPCC AR5 will give their verdict on this in early October…

UPDATE (11/08/13): The second figure was recently used in a U.S. Senate hearing (at about 3h27m).

UPDATE (23/09/13): A version of this comparison was shown on BBC News today as part of their preparatory coverage for the IPCC AR5.

Role of aerosols and other radiative forcings

These particular simulations use historical radiative forcings (greenhouse gases, solar activity etc.) until 2005, and an assumed ‘scenario’ of radiative forcings (named RCP 4.5) from 2005-2050. Firstly, this is just one scenario for the post-2005 period, and there are others – this uncertainty is not represented here.

One of the key uncertainties is in the role of aerosols (small particles emitted through fossil fuel burning which tend to reflect sunlight, interact with clouds and cool the climate). All the RCP scenarios assume that atmospheric concentrations of aerosols decline at slightly different rates post-2005, but all are perhaps optimistic. At the very least this does not fully sample the uncertainty in post-2005 aerosol emissions.

Why has the globe warmed less than projected in the last decade?

So, why might the simulations appear too warm? One possibility is that aerosol emissions have not declined as rapidly as assumed since 2005, causing the simulations to appear too warm. However, observations of aerosol emissions are somewhat uncertain. Also, no volcanic eruptions are present in the post-2005 simulations, although it is possible that recent moderate eruptions have had a cooling influence. In addition, solar activity post-2005 has been weaker than the simulations assume. All of these effects would make the simulations appear too warm.

There is also some recent evidence that the models with the very highest climate sensitivities may be inconsistent with the observations. Uncertainty in the observations of global temperatures is also not negligible, as shown by the red lines.

The final possible explanation is that internal climate variability has reduced the rate of warming this decade, and that some of the additional energy may be in the deep ocean instead of the atmosphere.

Some more technical considerations:

Although the broad qualitative picture is fairly robust, note that there is also sensitivity of the quantitative results to:

(1) choice of reference period (1961-1990 is used here)

(2) choice of observational dataset (HadCRUT4 is used here)

(3) choice of ensemble members – I have picked 1 ensemble member per model, but note that significant differences are possible in different ensemble members

with (1) being perhaps the most important. Also note that these 42 different simulations are not independent, due to similarities between the models used. For example, 8 simulations are from the NASA-GISS family of models. Also note that I have ‘masked’ the simulations to only use data at the same locations where gridded observations in the HadCRUT4 dataset exist.
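To see why the reference period matters, consider this minimal sketch of re-baselining. Two hypothetical models with different trends are each converted to anomalies relative to a chosen period; the gap between them in a later year then depends on which period was chosen. The model series and the alternative 1986-2005 period are illustrative assumptions only.

```python
def to_anomalies(years, values, ref_start=1961, ref_end=1990):
    """Subtract the reference-period mean so that period has zero mean anomaly."""
    ref = [v for y, v in zip(years, values) if ref_start <= y <= ref_end]
    if not ref:
        raise ValueError("reference period not covered by the series")
    baseline = sum(ref) / len(ref)
    return [v - baseline for v in values]

years = list(range(1950, 2014))
# Two hypothetical models with different absolute temperatures and trends.
model_a = [14.0 + 0.010 * (y - 1950) for y in years]
model_b = [13.2 + 0.020 * (y - 1950) for y in years]

gaps = {}
for start, end in [(1961, 1990), (1986, 2005)]:
    a = to_anomalies(years, model_a, start, end)
    b = to_anomalies(years, model_b, start, end)
    gaps[(start, end)] = b[-1] - a[-1]          # difference in 2013
    print(f"reference {start}-{end}: "
          f"model_b minus model_a in 2013 = {gaps[(start, end)]:+.3f}")
```

A later reference period pins the series together closer to the present, so the apparent spread (and the apparent position of the observations within it) shrinks for recent years and grows for earlier ones.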

Hi Ed,

I’m glad to see you put your name on your blog graphs! 🙂

It’s good to add the uncertainty. But it might be a bit better to add the observational uncertainty to the model spread. That way people don’t have to take the square root of the sum of the squares of uncertainties placed on different things in their heads. When people use the graphing method you use, they tend to overestimate the uncertainty. (For example: the 95% spread for ‘models if they were mis-measured’ is less than the sum of ‘95% uncertainty in models’ + ‘95% uncertainty in observation’, as we all know from the triangle inequality. But those who do not know can always convince themselves by using Monte Carlo and adding measurement noise to each model run, then finding the spread with the noise added.)
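The Monte Carlo check described above might look something like this minimal sketch. It assumes independent Gaussian errors, and both standard deviations are hypothetical values chosen only for illustration.

```python
import math
import random

def half_width_95(samples):
    """Half the distance between the 2.5th and 97.5th percentiles."""
    s = sorted(samples)
    lo = s[int(0.025 * (len(s) - 1))]
    hi = s[int(0.975 * (len(s) - 1))]
    return (hi - lo) / 2

rng = random.Random(1)
sigma_model = 0.15   # hypothetical model spread (deg C, 1 sigma)
sigma_obs = 0.05     # hypothetical observational error (deg C, 1 sigma)
n = 100_000

model = [rng.gauss(0, sigma_model) for _ in range(n)]
# "Mis-measured" models: add independent measurement noise to every value.
noisy = [m + rng.gauss(0, sigma_obs) for m in model]

mc = half_width_95(noisy)
quadrature = 1.96 * math.sqrt(sigma_model**2 + sigma_obs**2)
linear_sum = 1.96 * (sigma_model + sigma_obs)
print(f"Monte Carlo {mc:.3f}, quadrature {quadrature:.3f}, "
      f"linear sum {linear_sum:.3f}")
```

The simulated spread should sit close to the quadrature value and clearly below the straight sum of the two 95% ranges.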

Hi Lucia,

Learnt a lesson from last time about putting my name on figures!

Point taken about adding uncertainties, but for a simple graphic representation I think this way works better visually.

Thanks,

Ed.

Actually, I think the way I suggest works better. I’ll slap on my AR4 versions to show you how it looks. (I haven’t put those on there.)

The only problem is that the stated uncertainties are different for HadCRUT4/GISTEMP and NOAA.

But really, I’m not saying your way is bad. I just think the other way is easier. If you disagree– ok.

Wouldn’t we expect observations to be outside the 5-95% range one tenth of the time anyway? A point I made here: http://edavies.me.uk/2013/03/rose-again/

Regarding predictions, here’s something I’ve been wondering about: my understanding is that the PDO affects the ENSO – one phase of the PDO causes many more La Ninas than the other or something. Is that even vaguely right? How much do we know about the history of the PDO? Would Lonnie Thompson and co’s recent Quelccaya core with, it’s said, a long record of the ENSO give any useful information on the likely performance of the PDO?

Thanks Ed – yes, we should not be surprised if the observations fall outside the uncertainty occasionally. The question of the ‘reliability’ (i.e. are the forecast probabilities correct) is an interesting one and discussed previously, but these types of single scenario projection are definitely not designed to be probabilistic forecasts. But, this decade has been rather unusual, and needs explaining.

As for the PDO-ENSO connection – the proxy data may tell us about long timescale variability in ENSO, which may be linked to PDO variations, but I don’t think it is fully understood how the two modes of variability interact.

Thanks,

Ed.

My point was going to be the complete opposite. If I understand correctly the projections begin in 2005. By eye, the spread in the 5-95% uncertainties is about 0.6°C in 2013, and back in 2005 the observations were slap bang on the model mean. So the question might be how could we expect the observations to fall outside the model spread of projections given the short period of time? Temperatures just don’t move quickly enough for that to happen so soon.

I just can’t get over the fact that we are talking about tenths of a degree change in temperature and comparing it to a model mean with ~0.6°C spread. It’s like you’re throwing a dart at a six foot wide board.

Hi HR,

If you look at the figure with all the simulation lines, it is clear that decades with little or no warming do occur in the simulations, so it is not surprising that the observations can go from the middle to the edge of the range. 1967 is near the top, and 1977 near the bottom for example. And, yes, the spread is 0.6K, but the trend in the simulations is larger than this.

What is your estimate for a ‘likely’ range of temperatures in 2016-2035?

Ed.

I agree, that the global mean “land-surface” temperatures should be largely constrained to the inner blue range and rarely exceed the light blue. What’s normal is that the observations touch the boundary 6 times in 6 decades. As the modelled range is 5% to 95%, statistics have played out near-on perfectly.

As the temperature trend is chaotic and not random, there are always apparent trends that emerge in the temperature data. Despite this, we’d be just as wrong to say that we expect an upward rebound to fit the trend. In fact, on timescales of less than 20-30 years (sub-climate timescales) it is worth noting that there are lots of trends in the observation data throughout the time series here.

Hi Ed,

Would it be worth looking at the probability distribution of 10-year trends both in observations and models to decide how unusual the last decade was? Similar to what Kay et al. (GRL 2011) have done for Arctic sea-ice trends.
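A minimal sketch of that kind of analysis: fit a least-squares trend to every overlapping 10-year window in each simulation, then ask what fraction of those trends are as low as a given observed trend. The runs here are synthetic (a fixed trend plus AR(1) noise with made-up parameters), and the "observed" trend of zero is a hypothetical placeholder, not a HadCRUT4 value.

```python
import random

def ols_slope(y):
    """Least-squares slope of y against 0, 1, 2, ... (units of y per step)."""
    n = len(y)
    x_mean = (n - 1) / 2
    y_mean = sum(y) / n
    num = sum((i - x_mean) * (v - y_mean) for i, v in enumerate(y))
    den = sum((i - x_mean) ** 2 for i in range(n))
    return num / den

def rolling_trends(series, window=10):
    """Slope of every overlapping window of the given length."""
    return [ols_slope(series[i:i + window])
            for i in range(len(series) - window + 1)]

rng = random.Random(2)

def fake_run(n_years=64, trend=0.02, phi=0.5, sigma=0.1):
    """Synthetic stand-in for one simulation: linear trend plus AR(1) noise."""
    out, noise = [], 0.0
    for t in range(n_years):
        noise = phi * noise + rng.gauss(0, sigma)
        out.append(trend * t + noise)
    return out

model_trends = [s for _ in range(42) for s in rolling_trends(fake_run())]
observed_trend = 0.0   # hypothetical "flat decade", deg C per year
frac = sum(t <= observed_trend for t in model_trends) / len(model_trends)
print(f"{len(model_trends)} simulated decadal trends; "
      f"{100 * frac:.1f}% are at or below {observed_trend:.3f} deg C/yr")
```

Overlapping windows are not independent, so the fraction is only a rough guide to how unusual a decade is.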

Obviously, for the period from 2005 on the comparison isn’t fair any more, as you very clearly point out.

Hi Steffen,

Yes – this is interesting. A paper by Easterling & Wehner (2009) examined decadal trends for global temperatures – but this could be updated for the CMIP5 simulations also.

cheers,

Ed.

Interesting reference! But I think one should go beyond Easterling & Wehner. They only looked at the individual probability distributions of observed and modeled trends (their Fig. 3). But what one wants to know here is how often one would expect a certain mismatch between modelled and observed trends just by chance. That would mean looking at the joint probability distribution.

To illustrate my point: take your figure and imagine we are in 1975 and look back at the last pentad or so. Would we have concluded that the models must be terribly wrong? After all, the observed temperature during this time fell from the 95th to the 5th percentile of the model simulations…
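One simple way to approximate that question is a leave-one-out test: treat each simulation in turn as if it were the observations, and record how far its trend differs from the mean trend of all the others over the same window. The sketch below does this on synthetic runs. It is a stand-in for a proper joint-distribution analysis, and every parameter (including the 0.02 deg C/yr threshold) is an assumption made for illustration.

```python
import random

def ols_slope(y):
    """Least-squares slope of y against 0, 1, 2, ... (units of y per step)."""
    n = len(y)
    x_mean = (n - 1) / 2
    y_mean = sum(y) / n
    num = sum((i - x_mean) * (v - y_mean) for i, v in enumerate(y))
    den = sum((i - x_mean) ** 2 for i in range(n))
    return num / den

def leave_one_out_mismatch(runs, start, window=10):
    """For each run: its trend over the window minus the mean trend of the rest."""
    trends = [ols_slope(r[start:start + window]) for r in runs]
    total, n = sum(trends), len(trends)
    return [t - (total - t) / (n - 1) for t in trends]

rng = random.Random(3)

def fake_run(n_years=64, trend=0.02, phi=0.5, sigma=0.1):
    """Synthetic stand-in for one simulation: linear trend plus AR(1) noise."""
    out, noise = [], 0.0
    for t in range(n_years):
        noise = phi * noise + rng.gauss(0, sigma)
        out.append(trend * t + noise)
    return out

runs = [fake_run() for _ in range(42)]
mismatches = [m for start in range(55)           # every 10-year window
              for m in leave_one_out_mismatch(runs, start)]

threshold = 0.02   # hypothetical: pseudo-obs 0.02 deg C/yr cooler than the rest
frac = sum(m <= -threshold for m in mismatches) / len(mismatches)
print(f"{100 * frac:.1f}% of pseudo-observed decades fall at least "
      f"{threshold} deg C/yr below the mean of the other runs")
```

Because every run here shares the same forced trend, any mismatch arises purely from the simulated internal variability, which is the "just by chance" part of the question.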

Sounds like an interesting idea for an analysis! And no – I don’t think we would have concluded that the models were wrong in 1975!

cheers,

Ed.

Is there a way to download the ‘projections from 42 different climate models (CMIP5) for the 1950-2050 period, using a common reference period of 1961-1990’ in text file format?

I would like to try something and I use Excel for preference.

Hi DocMartyn,

One possibility is to use the Climate Explorer, which allows you to download the data:

http://climexp.knmi.nl/selectfield_cmip5.cgi

Ed.

When you talk of “choice of reference period”, do you mean the period where all the models are normalised to or the period of observational data input to the models?

When you talk of “choice of observational dataset”, are you using the observed data just within the reference period or up to 2006?

Do you permit the models to use “correction factors” and for which dates?

Could you please clarify what exactly the models have been made to do??

Interested to know which models provide better “fit” and what is different about them when compared to the others.

Hi blouis79,

(1) The reference period refers to the period where the models are normalised to have a zero anomaly.

(2) The choice of observational dataset refers to the fact that I have used the HadCRUT4 dataset, whereas I could have chosen the GISS or NOAA versions for global temperatures.

(3) The data is plotted as it comes out of the model – not corrected, apart from to set the reference period temperature to zero.

(4) The models have been used to simulate the climate of the past ~160 years. The inputs include observed GHG concentrations, solar activity, volcanic activity, sulphate aerosol emissions etc. They then simulate the future with similar inputs based on assumptions on future GHG concentrations etc (this is the Representative Concentration Pathway – RCP – referred to).

(5) Some models are better than others – there are a multitude of differences between them so it is not really possible to say why. Some factors might include the complexity of representation of various processes or the spatial resolution of the numerical grid used etc.

Hope this helps!

Ed.

Thanks Ed.

I understood that the models have a vast range of input data, some of which you mention at (4). The models also have “correction factors” as part of their input data.

So I presume if you run the models as they are, then they do come with all of the above.

I’m curious to see how the models would perform on a historical basis if all the model input data is pruned to only cover the reference period and you only feed them with temperature data from that period. Then there is more time to see how the models can match observations going forward.

Hi blouis79,

I think you misunderstand how climate models work when producing these historical simulations – they are not fed any temperature information, and I don’t know what you mean by “correction factors”?

http://en.wikipedia.org/wiki/Global_climate_model

cheers,

Ed.

I have looked at the code for ModelE, which is publicly available. I didn’t think the others have publicly available code.

ModelE (and I presume all of them) has a load of input data on various parameters (available here: http://portal.nccs.nasa.gov/GISS_modelE/index.pl), and correction factors are part of that. ModelE calls them “nudging related variables”.

See for example:

    MODULE NUDGE_COM
    !@sum NUDGE_COM contains all the nudging related variables
    !@auth
    !@ver
    USE MODEL_COM, only : im,jm,lm
    c USE DOMAIN_DECOMP, only : grid
    IMPLICIT NONE
    SAVE
    !@param nlevnc vertical levels of NCEP data
    INTEGER, PARAMETER :: nlevnc =17
    !@var U1, V1 NCEP wind at prior ncep timestep (m/s)
    !@var U2, V2 NCEP wind at the following ncep timestep (m/s)
    REAL*4, DIMENSION(IM,JM,LM) :: u1,v1,u2,v2
    REAL*4, DIMENSION(IM,JM-1,nlevnc) :: UN1,VN1,UN2,VN2
    REAL*4, DIMENSION(nlevnc) :: pl
    !@var netcdf integer
    INTEGER :: ncidu,ncidv,uid,vid,plid
    INTEGER :: step_rea=1,zirk=0
    !@var tau nuding time interpoltation
    REAL*8 :: tau
    !@param anudgeu anudgev relaxation constant
    REAL*8 :: anudgeu = 0., anudgev = 0.
    END MODULE NUDGE_COM

Hi blouis79,

For some particular types of simulation, nudging to observations is used. But is nudging used in these historical simulations? No.
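For readers unfamiliar with the term, nudging generally means adding a relaxation term that pulls a model variable towards an observed value. The toy sketch below shows the idea for a single number. It is not ModelE's implementation: the dynamics, names and constants are all made up for illustration.

```python
def step(u, forcing, dt, u_obs=None, a_nudge=0.0):
    """Advance a toy state u by one explicit-Euler step.
    With a_nudge > 0, an extra term relaxes u towards the observed value u_obs."""
    tendency = forcing - 0.5 * u              # toy dynamics: forcing minus damping
    if u_obs is not None and a_nudge > 0.0:
        tendency += a_nudge * (u_obs - u)     # Newtonian relaxation ("nudging")
    return u + dt * tendency

def run(n_steps, a_nudge):
    """Integrate the toy model from rest with a fixed forcing and target."""
    u = 0.0
    for _ in range(n_steps):
        u = step(u, forcing=1.0, dt=0.1, u_obs=5.0, a_nudge=a_nudge)
    return u

free = run(500, a_nudge=0.0)     # free-running: forcing balances damping
nudged = run(500, a_nudge=2.0)   # nudged: pulled towards u_obs
print(f"free-running {free:.3f}, nudged {nudged:.3f}")
```

In this sketch a relaxation constant of zero removes the extra term entirely, so the model evolves under its own dynamics and forcing alone.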

Ed.

Ed, Very good post. I have a question. It appears that there was a large drop in temperature about 1991-1992. I’m assuming that was a volcanic eruption. Or was it something else?

Yes – the eruption of Pinatubo in 1991 is responsible for the drop in temperatures. Other eruptions in 1982 (El Chichon) and 1963 (Agung) are also visible (especially in the simulations).

Thanks,

Ed.

Did you smooth the data? It appears that the models show a much bigger effect than the observations.

Thanks

Only annual means are used in everything above. Do you mean that the models show a larger response to Pinatubo than the observations? If so, yes I agree, and this is interesting and a matter of further research as to why.

Ed.

Sorry for my ignorant question, but why do the model uncertainties not diverge from zero at the start point, 1950? How is it that even the starting points are uncertain?

Hi Roy,

The simulations actually start around 1850, and they are presented as anomalies from the mean of 1961-1990, so they should not be expected to start from the same points.

cheers,

Ed.

Ed,

I think that this is comparing the differences between various climate models. All fine and good.

Are you aware of any covariance analysis (see http://en.wikipedia.org/wiki/Kalman_filter without external observations) that looks at the propagation of uncertainty within ONE model? Basically, every variable (AND parameter) is assumed to have an initial uncertainty, with driving noise if appropriate. These statistics are propagated in time around the mean and give an indicator of how certain we are of our mean. It’s sort of like a Monte Carlo analysis but far cleaner.

For example, if the mean increases 10 degrees in twenty years and has an uncertainty of 1 degree about the mean, we might be inclined to believe the computer simulations. But, if the mean increases 10 degrees in twenty years but has an uncertainty of 20 degrees about the mean, we might be inclined to believe the computer simulation needs further refinement.
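For a linear model the idea can be sketched in a few lines. Below, the mean and variance of a one-variable system are propagated analytically (the prediction step of a Kalman filter with no observations) and compared against a brute-force Monte Carlo run. The model and all its coefficients are toy assumptions; a real climate model is nonlinear and vastly higher dimensional.

```python
import random

def propagate(mean, var, a, b, q, n_steps):
    """Propagate mean and variance of x[k+1] = a*x[k] + b + w, with w ~ N(0, q)."""
    for _ in range(n_steps):
        mean = a * mean + b
        var = a * a * var + q
    return mean, var

def monte_carlo(mean0, var0, a, b, q, n_steps, n_samples, rng):
    """Estimate the same final mean and variance by simulating many samples."""
    finals = []
    for _ in range(n_samples):
        x = rng.gauss(mean0, var0 ** 0.5)
        for _ in range(n_steps):
            x = a * x + b + rng.gauss(0, q ** 0.5)
        finals.append(x)
    m = sum(finals) / n_samples
    v = sum((x - m) ** 2 for x in finals) / (n_samples - 1)
    return m, v

a, b, q = 0.9, 0.1, 0.01          # toy dynamics and driving-noise variance
mean_c, var_c = propagate(0.0, 0.04, a, b, q, n_steps=20)
mean_mc, var_mc = monte_carlo(0.0, 0.04, a, b, q, 20, 20_000, random.Random(4))
print(f"covariance propagation: mean {mean_c:.3f}, variance {var_c:.4f}")
print(f"Monte Carlo:            mean {mean_mc:.3f}, variance {var_mc:.4f}")
```

The two estimates agree here because the system is linear and Gaussian. In a multi-variable version the variance update becomes a matrix product, which is where the dimension of the problem starts to bite.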

I think the issue with that suggestion is the size of the problem – there are well over 10^6 dimensions!

There are certainly experiments where the same future simulations have been performed with different parameter settings in a single model – e.g. the Met Office QUMP experiments, or the climateprediction.net project. These projects find a range for climate sensitivity that is comparable with the multi-model ensemble.

cheers,

Ed.

Dear Mr Hawkins thanks for the opportunity to correspond

I noticed the Met Office has published their projections publicly using a new experimental model that is indicating a continuing period into this coming decade of flattening global temp’ trends with a minimal rise

They do not use CMIP 5?

However

The Australian projections for the new IPCC report still have the hockey stick and the trend line is different to the current published Met office projection?

Will there be any co-ordination of the various agencies and their projections to bring some consistency??

Also I bring to your attention the Qian and Lu research paper (2010)

titled

Periodic oscillations in millennial global mean temperature and their causes

They have predicted a global cooling.. Like many other researchers

4 periodic oscillations attained a constructive maximum during the time interval 1992 -2004

21.1 yr

62.5 yr

116 yr

194.6 yr

I have read that many scientists like Lockwood have measured the solar forcing of the 22yr / 11 yr solar cycle at minus 0.3 deg C per cycle? I believe

Now this assessment is not complete because as Qian and Lu have identified the 4 cycles are at max and on their way down so you must account for the forcing of these longer more powerful cycles

We are talking about constructive interference here

4 cycles overlaying constructively and going down together.

This is a form of cyclic AMPLIFICATION. Constructive interference at a sub maximum strength

The downward global temperature trend could be steeper than currently being mooted

I think Qian and Lu estimate a very crude 0.1 deg C variability for EACH of the 4 cycles

That would make an additive effect? or logarithmic depending on the forcing mechanism?

Additive would yield a decline of 0.4 deg C from the cycles until minimum in the 2030’s ?

Now if forcing mechanisms turn out to be logarithmic due to feedbacks

Ie. Increased albedo, shifting Jetstream , cloud cover etc the decline could be greater

Just wondered if you had covered your bases with this

Some scientists are predicting a hockey stick down in temps. LOL Ironic isn’t it..

The current rate of decline since 2005 is 0.4 deg C per century (HadCRUT4) but we are on the crest of the cyclic wave of 4 synchronous cycles!!

So the curve isn’t steep AT THE MOMENT.

The 3 of the longer cycles trend down TOGETHER at least until 2035

This set up in these 4 cycles is unprecedented in modern history or earlier?

When was the last time in history this 4 cyclic synchronicity event happened?

Do you have a similar period to compare?

Also of consideration would be that a number of cycles together on a downward phase produces a saw tooth type wave effect with a temp’drop off of a cliff so to speak.

Considering the sensitivity value of CO2 is only at 400ppm currently and influence is marginal at these values?

these cycles could over power GHG very easily

We are not at doubling of CO2 yet..

My thought is that you should include this uncertainty in the lower temperature boundary

of course that would look ridiculous. A hockey stick showing global cooling and a hockeystick showing the AGW warming. a HUMONGOUS ERROR MARGIN..

LOL

but a reality

What is the probability that this global cooling could occur?

Landscheidt , Archibald, the Russian scientist Abbu..?, and others

So . You say.. We don’t know the mechanism..

Is that an excuse to ignore the findings of statistical analysis of cycles.

A downturn is under way .. cycle wise ( fourier analysis)

Do you trust your models to predict the opposing forcings of a cyclic downturn vs GHG forcing.

The uncertainty band just seems to just keep getting bigger.. and rightly so..

regards

from a cycle rep’

Ed,

Would you please also give the projections for the models in which global warming is NOT man-made? After all, that is the implicit alternative hypothesis.